THE PERPETUAL LINE-UP

UNREGULATED POLICE FACE RECOGNITION IN AMERICA

www.perpetuallineup.org

OCTOBER 18, 2016

Clare Garvie, Associate

Alvaro M. Bedoya, Executive Director

Jonathan Frankle, Staff Technologist

RESEARCH

Moriah Daugherty, Research Assistant

Katie Evans, Associate

Edward J. George, Chief Research Assistant

Sabrina McCubbin, Research Assistant

Harrison Rudolph, Law Fellow

Ilana Ullman, Google Policy Fellow

Sara Ainsworth, Research Assistant

David Houck, Research Assistant

Megan Iorio, Research Assistant

Matthew Kahn, Research Assistant

Eric Olson, Research Assistant

Jaime Petenko, Research Assistant

Kelly Singleton, Research Assistant

DESIGN

Rootid

WALT WHITMAN

Writing and talk do not prove me,

I carry the plenum of proof and every thing else in my face…

I. EXECUTIVE SUMMARY 1

A. Key Findings 2

B. Recommendations 4

II. INTRODUCTION 7

III. BACKGROUND 9

A. What is Face Recognition Technology? 9

B. e Unique Risks of Face Recognition 9

C. How Does Law Enforcement Use Face Recognition? 10

D. Our Research 12

IV. A RISK FRAMEWORK FOR LAW ENFORCEMENT FACE RECOGNITION 16

A. Risk Factors 16

B. Risk Framework 17

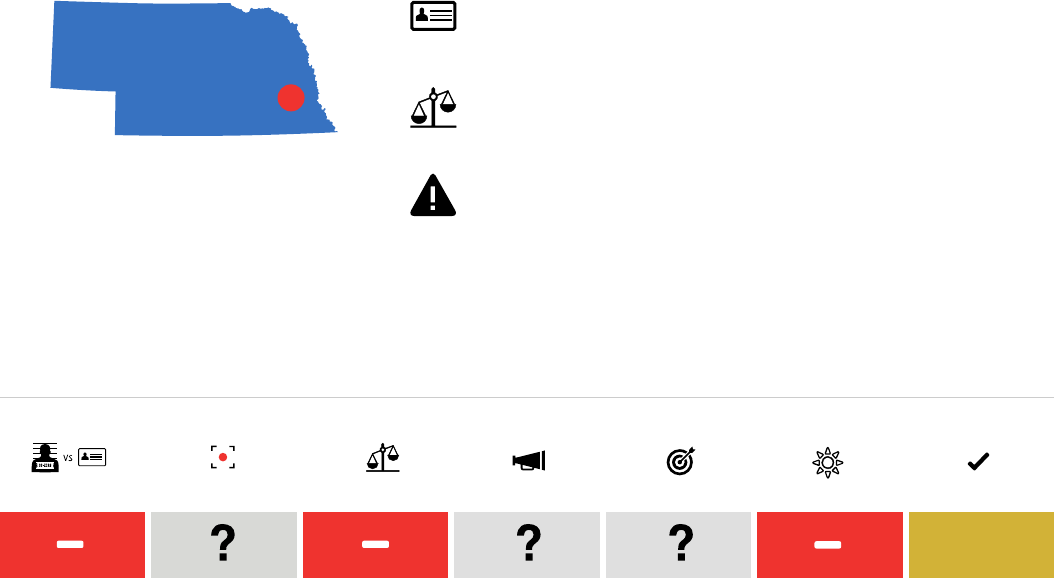

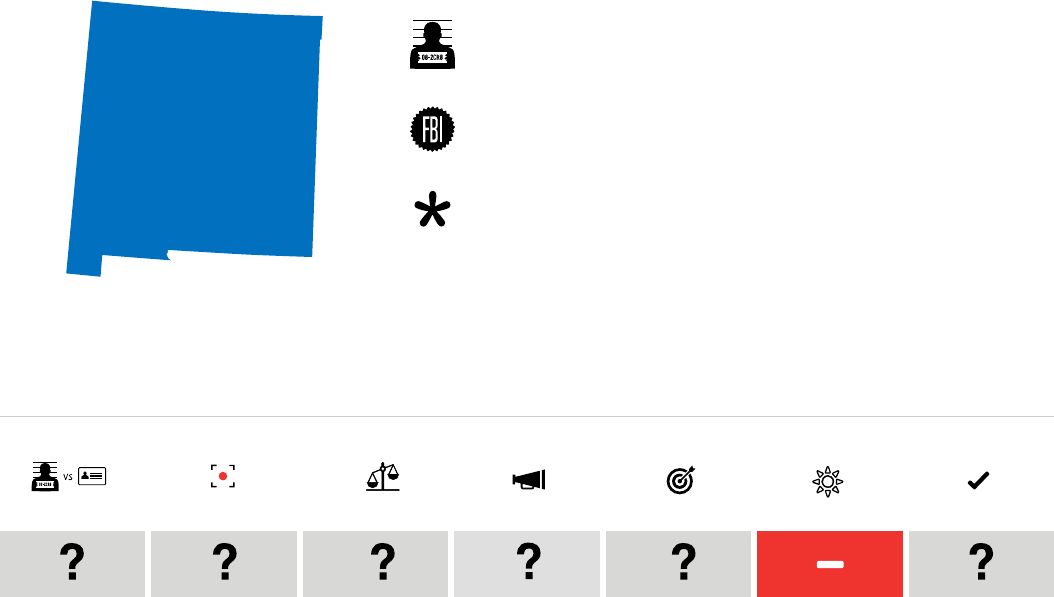

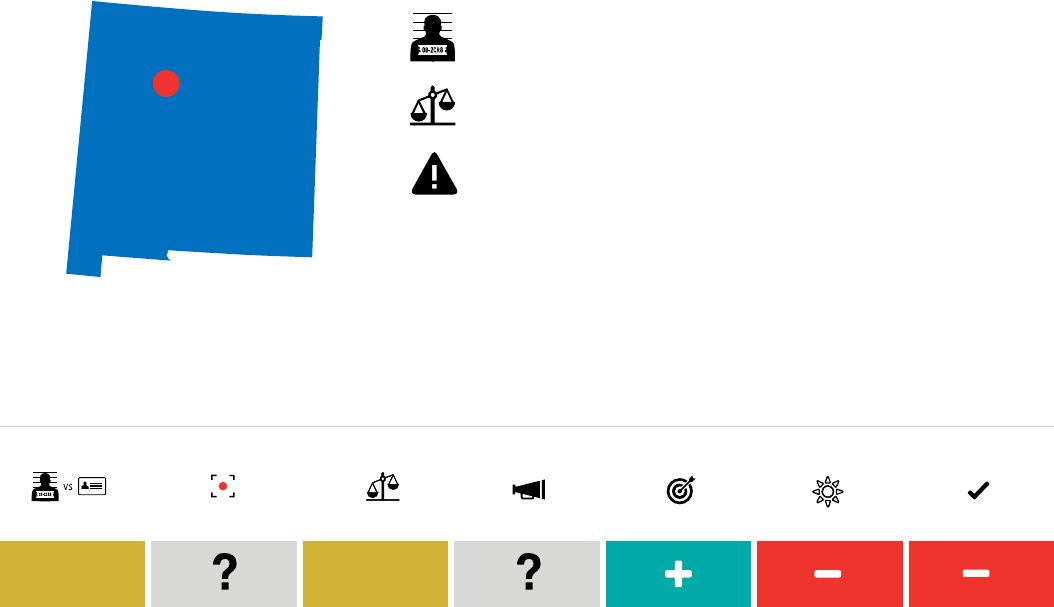

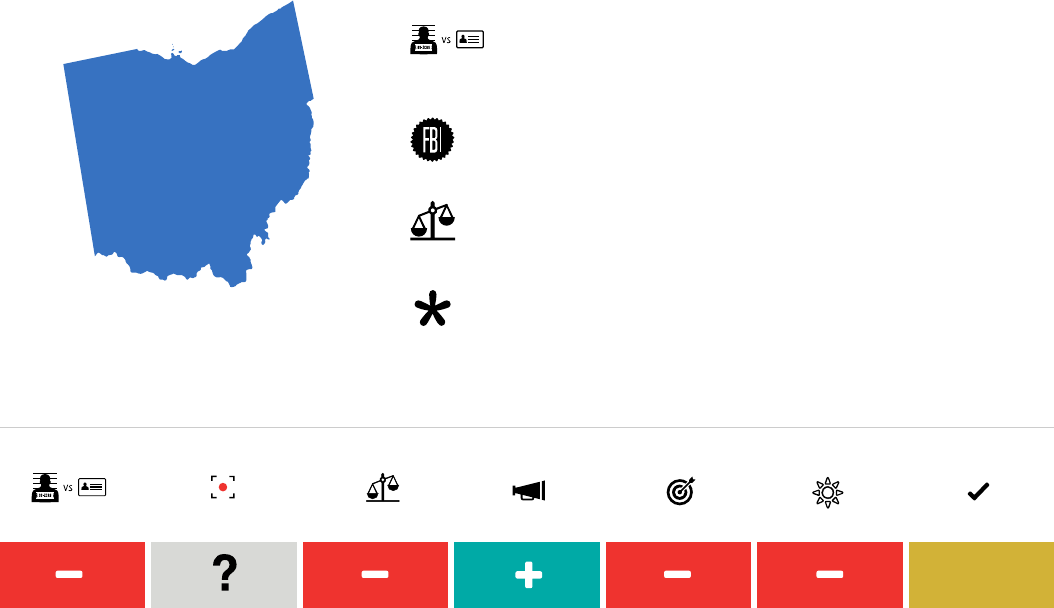

V. FINDINGS & SCORECARD 23

A. Deployment 23

B. Fourth Amendment 31

C. Free Speech 41

D. Accuracy 46

E. Racial Bias 53

F. Transparency & Accountability 58

VI. RECOMMENDATIONS 62

A. Legislatures 62

B. Law Enforcement 65

C. e National Institute for Standards & Technology 68

D. Face Recognition Companies 69

E. Community Leaders 70

VII. CONCLUSION 72

VIII. ACKNOWLEDGEMENTS 74

IX. ENDNOTES 75

X. METHODOLOGY 93

XI. MODEL FACE RECOGNITION LEGISLATION 102

XII. MODEL POLICE FACE RECOGNITION USE POLICY 116

XIII. CITY & STATE BACKGROUNDERS 120

TABLE OF CONTENTS

I. EXECUTIVE SUMMARY

There is a knock on your door. It’s the police.

There was a robbery in your neighborhood. They

have a suspect in custody and an eyewitness. But

they need your help: Will you come down to the

station to stand in the line-up?

Most people would probably answer “no.” This

summer, the Government Accountability Office

revealed that close to 64 million Americans do

not have a say in the matter: 16 states let the

FBI use face recognition technology to compare

the faces of suspected criminals to their driver’s

license and ID photos, creating a virtual line-up

of their state residents. In this line-up, it’s not a

human that points to the suspect—it’s an algorithm.

But the FBI is only part of the story. Across the

country, state and local police departments are

building their own face recognition systems,

many of them more advanced than the FBI’s.

We know very little about these systems. We

don’t know how they impact privacy and civil

liberties. We don’t know how they address

accuracy problems. And we don’t know how any

of these systems—local, state, or federal—affect

racial and ethnic minorities.

This report closes these gaps. The result of a year-

long investigation and over 100 records requests

to police departments around the country, it is

the most comprehensive survey to date of law

enforcement face recognition and the risks that

it poses to privacy, civil liberties, and civil rights.

Combining FBI data with new information we

obtained about state and local systems, we find

that law enforcement face recognition affects

over 117 million American adults. It is also

unregulated. A few agencies have instituted

meaningful protections to prevent the misuse

of the technology. In many more cases, it is out of

control.

One in two American adults

is in a law enforcement face

recognition network.

The benefits of face recognition are real. It

has been used to catch violent criminals and

fugitives. The law enforcement officers who use

the technology are men and women of good

faith. They do not want to invade our privacy or

create a police state. They are simply using every

tool available to protect the people that they are

sworn to serve. Police use of face recognition is

inevitable. This report does not aim to stop it.

Rather, this report offers a framework to reason

through the very real risks that face recognition

creates. It urges Congress and state legislatures

to address these risks through commonsense

regulation comparable to the Wiretap Act. These

reforms must be accompanied by key actions

by law enforcement, the National Institute

of Standards and Technology (NIST), face

recognition companies, and community leaders.

A. KEY FINDINGS

Our general findings are set forth below. Specific

findings for 25 local and state law enforcement

agencies can be found in the City & State

Backgrounders (p. 120). Our Face Recognition

Scorecard (p. 24) evaluates these agencies’ impact

on privacy, civil liberties, civil rights, transparency

and accountability. The records underlying all of

our conclusions are available online.

1. Law enforcement face recognition

networks include over 117 million

American adults—and may soon include

many more. Face recognition is neither new

nor rare. FBI face recognition searches are

more common than federal court-ordered

wiretaps. At least one out of four state or

local police departments has the option to

run face recognition searches through their

or another agency’s system. At least 26

states (and potentially as many as 30) allow

law enforcement to run or request searches

against their databases of driver’s license and

ID photos. Roughly one in two American

adults has their photos searched this way.

2. Different uses of face recognition

create different risks. This report offers

a framework to tell them apart. A face

recognition search conducted in the field

to verify the identity of someone who has

been legally stopped or arrested is different,

in principle and effect, than an investigatory

search of an ATM photo against a driver’s

license database, or continuous, real-time

scans of people walking by a surveillance

camera. The former is targeted and public.

The latter are generalized and invisible.

While some agencies, like the San Diego

Association of Governments, limit

themselves to more targeted use of the

technology, others are embracing high and

very high risk deployments.

3. By tapping into driver’s license databases,

the FBI is using biometrics in a way

it’s never done before. Historically, FBI

fingerprint and DNA databases have

been primarily or exclusively made up

of information from criminal arrests or

investigations. By running face recognition

searches against 16 states’ driver’s license

photo databases, the FBI has built a

biometric network that primarily includes

law-abiding Americans. This is unprecedented

and highly problematic.

4. Major police departments are exploring

real-time face recognition on live

surveillance camera video. Real-time

face recognition lets police continuously

scan the faces of pedestrians walking by a

street surveillance camera. It may seem like

science fiction. It is real. Contract documents

and agency statements show that at least

five major police departments—including

agencies in Chicago, Dallas, and Los

Angeles—either claimed to run real-time

face recognition off of street cameras, bought

technology that can do so, or expressed

an interest in buying it. Nearly all major

face recognition companies offer real-time

software.

5. Law enforcement face recognition is

unregulated and in many instances out

of control. No state has passed a law

comprehensively regulating police face

recognition. We are not aware of any

agency that requires warrants for searches

or limits them to serious crimes. This

has consequences. The Maricopa County

Sheriff’s Office enrolled all of Honduras’

driver’s licenses and mug shots into its

database. The Pinellas County Sheriff’s

Office system runs 8,000 monthly searches

on the faces of seven million Florida

drivers—without requiring that officers have

even a reasonable suspicion before running

a search. The county public defender reports

that the Sheriff’s Office has never disclosed

the use of face recognition in Brady evidence.

6. Most law enforcement agencies are not

taking adequate steps to protect free

speech. There is a real risk that police face

recognition will be used to stifle free speech.

There is also a history of FBI and police

surveillance of civil rights protests. Of the

52 agencies that we found to use (or have

used) face recognition, we found only one,

the Ohio Bureau of Criminal Investigation,

whose face recognition use policy expressly

prohibits its officers from using face

recognition to track individuals engaging in

political, religious, or other protected free

speech.

7. Most law enforcement agencies do little

to ensure that their systems are accurate.

Face recognition is less accurate than

fingerprinting, particularly when used in

real-time or on large databases. Yet we found

only two agencies, the San Francisco Police

Department and the Seattle region’s South

Sound 911, that conditioned purchase of the

technology on accuracy tests or thresholds.

There is a need for testing. One major face

recognition company, FaceFirst, publicly

advertises a 95% accuracy rate but disclaims

liability for failing to meet that threshold in

contracts with the San Diego Association of

Governments. Unfortunately, independent

accuracy tests are voluntary and infrequent.

8. The human backstop to accuracy is non-

standardized and overstated. Companies and

police departments largely rely on police officers

to decide whether a candidate photo is in fact a

match. Yet a recent study showed that, without

specialized training, human users make the

wrong decision about a match half the time. We

found only eight face recognition systems where

specialized personnel reviewed and narrowed

down potential matches. The training regime

for examiners remains a work in progress.

9. Police face recognition will

disproportionately affect African

Americans. Many police departments do

not realize that. In a Frequently Asked

Questions document, the Seattle Police

Department says that its face recognition

system “does not see race.” Yet an FBI co-

authored study suggests that face recognition

may be less accurate on black people. Also,

due to disproportionately high arrest rates,

systems that rely on mug shot databases likely

include a disproportionate number of African

Americans. Despite these findings, there is no

independent testing regime for racially biased

error rates. In interviews, two major face

recognition companies admitted that they did

not run these tests internally, either.

Face recognition may be

least accurate for those it is

most likely to affect:

African Americans

10. Agencies are keeping critical information

from the public. Ohio’s face recognition

system remained almost entirely unknown

to the public for five years. The New York

Police Department acknowledges using face

recognition; press reports suggest it has an

advanced system. Yet NYPD denied our

records request entirely. The Los Angeles

Police Department has repeatedly announced

new face recognition initiatives—including a

“smart car” equipped with face recognition and

real-time face recognition cameras—yet the

agency claimed to have “no records responsive”

to our document request. Of 52 agencies, only

four (less than 10%) have a publicly available

use policy. And only one agency, the San

Diego Association of Governments, received

legislative approval for its policy.

11. Major face recognition systems are not

audited for misuse. Maryland’s system,

which includes the license photos of over

two million residents, was launched in 2011.

It has never been audited. The Pinellas

County Sheriff’s Office system is almost 15

years old and may be the most frequently

used system in the country. When asked if

his office audits searches for misuse, Sheriff

Bob Gualtieri replied, “No, not really.”

Despite assurances to Congress, the FBI

has not audited use of its face recognition

system, either. Only nine of 52 agencies

(17%) indicated that they log and audit

their officers’ face recognition searches for

improper use. Of those, only one agency,

the Michigan State Police, provided

documentation showing that their audit

regime was actually functional.

B. RECOMMENDATIONS

1. Congress and state legislatures should

pass commonsense laws to regulate law

enforcement face recognition. Such laws

should require the FBI or the police to have a

reasonable suspicion of criminal conduct prior

to a face recognition search. After-the-fact

investigative searches—which are invisible to

the public—should be limited to felonies.

Mug shots, not driver’s license and ID

photos, should be the default photo

databases for face recognition, and they

should be periodically scrubbed to eliminate

the innocent. Except for identity theft and

fraud cases, searches of license and ID

photos should require a court order issued

upon a showing of probable cause, and

should be restricted to serious crimes. If

these searches are allowed, the public should

be notified at their department of motor

vehicles.

If deployed pervasively on surveillance video

or police-worn body cameras, real-time face

recognition will redefine the nature of public

spaces. At the moment, it is also inaccurate.

Communities should carefully weigh whether

to allow real-time face recognition. If they do,

it should be used as a last resort to intervene

in only life-threatening emergencies. Orders

allowing it should require probable cause,

specify where continuous scanning will occur,

and cap the length of time it may be used.

Real-time face recognition

will redefine the nature of

public spaces. It should be

strictly limited.

Use of face recognition to track people on

the basis of their political or religious beliefs

or their race or ethnicity should be banned.

All face recognition use should be subject to

public reporting and internal audits.

To lay the groundwork for future

improvements in face recognition, Congress

should provide funding to NIST to increase

the frequency of accuracy tests, create

standardized, independent testing for racially

biased error rates, and create photo databases

that facilitate such tests.

State and federal financial assistance for police

face recognition systems should be contingent

on public reporting, accuracy and bias tests,

legislative approval—and public posting—

of a face recognition use policy, and other

standards in line with these recommendations.

A Model Face Recognition Act (p. 102), for

Congress or a state legislature, is included at

the end of the report.

2. The FBI and Department of Justice (DOJ)

should make significant reforms to the

FBI’s face recognition system. The FBI

should refrain from searching driver’s license

and ID photos in the absence of express

approval for those searches from a state

legislature. If it proceeds with those searches,

the FBI should restrict them to investigations

of serious crimes where FBI officials have

probable cause to implicate the search

subject. The FBI should periodically scrub its

mug shot database to eliminate the innocent,

require reasonable suspicion for state searches

of that database, and restrict those searches

to investigations of felonies. Overall access

to the database should be contingent on

legislative approval of an agency’s use policy.

The FBI should audit all searches for misuse,

and test its own face recognition system, and

the state systems that the FBI accesses, for

accuracy and racially biased error rates.

The DOJ Civil Rights Division should

evaluate the disparate impact of police face

recognition, first in jurisdictions where it has

open investigations and then in state and

local law enforcement more broadly. DOJ

should also develop procurement guidance

for state and local agencies purchasing face

recognition programs with federal funding.

The FBI should be transparent about its

use of face recognition. It should reverse

its current proposal to exempt its face

recognition system from key Privacy Act

requirements. It should also publicly and

annually identify the photo databases

it searches and release statistics on the

number and nature of searches, arrests, the

convictions stemming from those searches,

and the crimes that those searches were used

to investigate.

3. Police should not run face recognition

searches of license photos without

clear legislative approval. Many police

departments have run searches of driver’s

license and ID photos without express

legislative approval. Police should observe a

moratorium on those searches until legislatures

vote on whether or not to allow them.

Police should develop use policies for face

recognition, publicly post those policies, and

seek approval for them from city councils

or other local legislative bodies. City

councils should involve their communities

in deliberations regarding support for this

technology, and consult with privacy and

civil liberties organizations in reviewing

proposed use policies.

When buying software and hardware, police

departments should condition purchase on

accuracy and bias tests and periodic tests of

the systems in operational conditions over

the contract period. They should avoid sole

source contracts and contracts that disclaim

vendor responsibility for accuracy.

All agencies should implement audits to

prevent and identify misuse and a system of

trained face examiners to maximize accuracy.

Regardless of their approach to contracting,

all agencies should regularly test their

systems for accuracy and bias.

A Model Police Face Recognition Use

Policy (p. 116) is included at the end of this

report.

4. The National Institute of Standards and

Technology (NIST) should expand the

scope and frequency of accuracy tests.

NIST should create regular tests for

algorithmic bias on the basis of race, gender,

and age, increase the frequency of existing

accuracy tests, develop tests that mirror law

enforcement workflows, and deepen its focus

on tests for real-time face recognition. To

help empower others to conduct testing,

NIST should develop a set of best practices

for accuracy tests and develop and distribute

new photo datasets to train and evaluate

algorithms. To help efforts to diminish

racially biased error rates, NIST should

ensure that these datasets reflect the diversity

of the American population.

5. Face recognition companies should test

their systems for algorithmic bias on the

basis of race, gender, and age. Companies

should also voluntarily publish performance

results for modern, publicly available

benchmarks—giving police departments and

city councils more bases upon which to draw

comparisons.

6. Community leaders should press for

policies and legislation that protect privacy,

civil liberties, and civil rights. Citizens are

paying for police and FBI face recognition

systems. They have a right to know how

those systems are being used.

If those agencies refuse, advocates should

take them to court. Citizens should also

press legislators and law enforcement

agencies for laws and use policies that

protect privacy, civil liberties, and civil

rights, and prevent misuse and abuse. Law

enforcement and legislatures will not act

without concerted community action.

This report provides the resources that citizens

will need to effect this change. In addition

to the City and State Backgrounders and

the Face Recognition Scorecard, a list of

questions that citizens can ask their elected

representative or law enforcement agency is in

the Recommendations (p. 70).

7

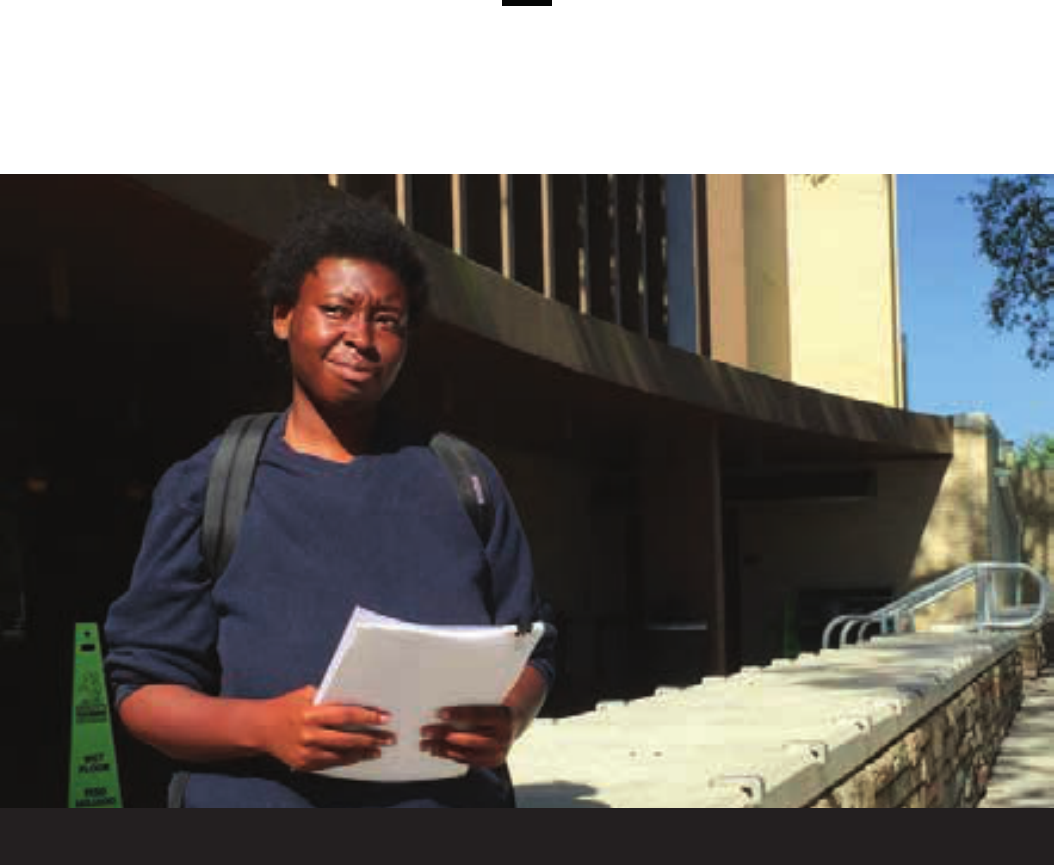

Chris Wilson is a soft-spoken Classics major

working towards her second Bachelor’s degree

at the University of South Florida. She enjoys

learning Latin and studying ancient Greece and

Rome. “I’m a history nut,” she says.

But Chris is not just a scholar—she is also a

civil rights leader. For her, social justice is at

the core of education: “A lot of students believe

that we have to put up with the way things

are—and that’s not right.” Chris sees it as her

responsibility to “pop the bubble.”

Earlier this year, Chris helped organize a protest

against the treatment of black students at the

Florida State Fair. In 2014, a 14 year-old honors

student, Andrew Joseph III, had been killed by

a passing car after being ejected by police from

the Florida State Fair along with dozens of other

students, most of them African American.

1

On February 7, 2016—the second anniversary of

Andrew’s death—Chris and three others locked

themselves together just inside the fairground

gates and called for a boycott. Police ordered them

II. INTRODUCTION

Figure 1: Chris Wilson at the University of South Florida campus. (Photo: Center on Privacy & Technology)

8

The Perpetual Line-Up

to leave. Chris and her friends stayed where they

were.

2

Chris was arrested for trespass, a misdemeanor.

The Hillsborough County Sheriff’s Office took

her to a local station, fingerprinted her, took

her mug shot, and released her that evening.

She had never been arrested before, and so

she was informed that she was eligible for a

special diversion program. She paid a fine, did

community service, and the charges against her

were dropped.

Chris was not told that as a result of her arrest,

her mug shot has likely been added to not one,

but two separate face recognition databases run

by the FBI and the Pinellas County Sheriff’s

Office.

3

These two databases alone are searched

thousands of times a year by over 200 state, local

and federal law enforcement agencies.

4

The next time Chris participates in a protest, the

police won’t need to ask her for her name in order

to identify her. They won’t need to talk to her at all.

They only need to take her photo. FBI co-authored

research suggests that these systems may be least

accurate for African Americans, women, and young

people aged 18 to 30.

5

Chris is 26. She is black.

Unless she initiates a special court proceeding to

expunge her record, she will be enrolled in these

databases for the rest of her life.

6

What happened to Chris doesn’t affect only

activists: Increasingly, law enforcement face

recognition systems also search state driver’s

license and ID photo databases. In this way,

roughly one out of every two American adults

(48%) has had their photo enrolled in a criminal

face recognition network.

7

They may not know it, but Chris Wilson and

over 117 million American adults are now

part of a virtual, perpetual line-up. What does

this mean for them? What does this mean for

our society? Can police use face recognition

to identify only suspected criminals—or can

they use it to identify anyone they want? Can

police use it to identify people participating in

protests? How accurate is this technology, and

does accuracy vary on the basis of race, gender or

age? Can communities debate and vote on the

use of this technology? Or is it being rolled out

in secret?

FBI and police face recognition systems have

been used to catch violent criminals and fugitives.

Their value to public safety is real and compelling.

But should these systems be used to track Chris

Wilson? Should they be used to track you?

III. BACKGROUND

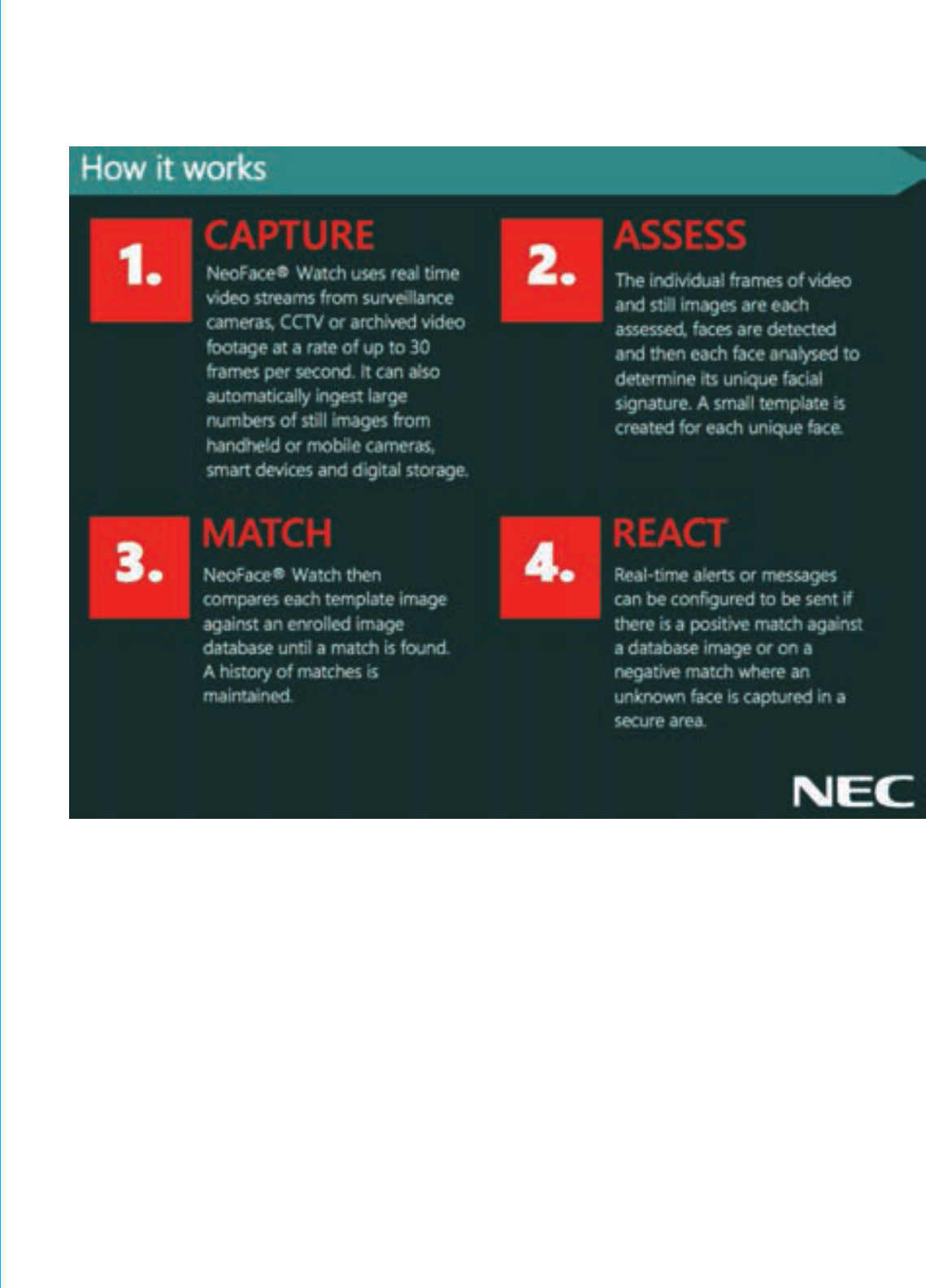

A. WHAT IS FACE RECOGNITION

TECHNOLOGY?

Face recognition is the automated process of

comparing two images of faces to determine

whether they represent the same individual.

Before face recognition can identify someone,

an algorithm must first find that person’s face

within the photo. This is called face detection.

Once detected, a face is “normalized”—scaled,

rotated, and aligned so that every face that the

algorithm processes is in the same position.

This makes it easier to compare the faces.

Next, the algorithm extracts features from the

face—characteristics that can be numerically

quantified, like eye position or skin texture.

Finally, the algorithm examines pairs of faces

and issues a numerical score reflecting the

similarity of their features.

Face recognition is inherently probabilistic: It

does not produce binary “yes” or “no” answers,

but rather identifies more likely or less likely

matches.

8

Most police face recognition systems

will output either the top few most similar

photos or all photos above a certain similarity

threshold. Law enforcement agencies call these

photos “candidates” for further investigation.
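
The scoring and candidate-selection steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not any vendor's or agency's actual method: it assumes each face has already been detected, normalized, and reduced to a numeric feature vector, and it uses cosine similarity as a stand-in for whatever scoring a real system applies. The names `cosine_similarity`, `candidates`, `top_k`, and `threshold`, and all of the numbers, are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Score two feature vectors; a higher score means more similar (assumed metric)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def candidates(probe, gallery, top_k=None, threshold=None):
    """Return (photo_id, score) pairs ranked from most to least similar.

    gallery maps a photo ID to its feature vector. The caller can keep
    only the top_k best scores, only scores at or above threshold, or both.
    """
    scored = [(pid, cosine_similarity(probe, vec)) for pid, vec in gallery.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    if threshold is not None:
        scored = [pair for pair in scored if pair[1] >= threshold]
    if top_k is not None:
        scored = scored[:top_k]
    return scored


# Toy data: three enrolled photos and one probe photo (made-up numbers).
gallery = {
    "photo_a": [1.0, 0.0, 0.0],
    "photo_b": [0.9, 0.1, 0.0],
    "photo_c": [0.0, 1.0, 0.0],
}
probe = [1.0, 0.05, 0.0]

ranked = candidates(probe, gallery, top_k=2)       # the two most similar photos
above = candidates(probe, gallery, threshold=0.5)  # every photo scoring 0.5 or more
```

Note that neither call returns a "yes" or "no." Both return a ranked list of possible matches, which is why a human reviewer, or a policy about how to treat the list, still has to decide what happens next.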

Some facial features may be better indicators of

similarity than others. Many face recognition

algorithms figure out which features matter most

through training. During training, an algorithm

is given pairs of face images of the same person.

Over time, the algorithm learns to pay more

attention to the features that were the most

reliable signals that the two images contained

the same person.

If a training set skews towards

a certain race, the algorithm

may be better at identifying

members of that group.

The make-up of a training set can influence the

kinds of photos that an algorithm is most adept

at examining. If a training set is skewed towards

a certain race, the algorithm may be better at

identifying members of that group as compared

to individuals of other races.

9

The mathematical machinery behind a face

recognition algorithm can include millions

of variables that are optimized in the process

of training. This intricacy is what gives an

algorithm the capacity to learn, but it also makes

it very difficult for a human to examine an

algorithm or generalize about its behavior.

B. THE UNIQUE RISKS OF FACE

RECOGNITION

Most law enforcement technology tracks your

technology—your car, your phone, or your

computer. Biometric technology tracks your

body.

10

The difference is significant.

Americans change smartphones every two and a

half years, and replace cars every five to six and a

half years.

11

Fingerprints are proven to be stable

for more than a decade, and are believed to be

stable for life.

12

Separately, many states’ driver’s

license renewal requirements ensure that state

governments consistently have an up-to-date

image of a driver’s face.

13

Face recognition allows

tracking from far away, in

secret, and on large numbers

of people.

Here, we can begin to see how face recognition

creates opportunities for tracking—and risks—

that other biometrics, like fingerprints, do not.

Along with names, faces are the most prominent

identifiers in human society—online and

offline. Our faces—not fingerprints—are on our

driver’s licenses, passports, social media pages,

and online dating profiles. Except for extreme

weather, holidays, and religious restrictions, it

is generally not considered socially acceptable

to cover one’s face; often, it’s illegal.

14

You only

leave your fingerprints on the things you touch.

When you walk outside, your face is captured by

every smartphone and security camera pointed

your way, whether or not you can see them.

Face recognition isn’t just a different biometric;

those differences allow for a different kind of

tracking that can occur from far away, in secret,

and on large numbers of people.

Professor Laura Donohue explains that up until

the 21st century, governments used biometric

identification in a discrete, one-time manner to

identify specific individuals. This identification

has usually required that person’s proximity or

cooperation—making the process transparent to

that person. These identifications have typically

occurred in the course of detention or in a secure

government facility. Donohue refers to this

form of biometric identification as Immediate

Biometric Identification, or IBI. A prime example

of IBI would be the practice of fingerprinting

someone during booking for an arrest.

In its most advanced forms, face recognition

allows for a different kind of tracking. Donohue

calls it Remote Biometric Identification, or

RBI. In RBI, the government uses biometric

technology to identify multiple people in a

continuous, ongoing manner. It can identify

them from afar, in public spaces. Because of this,

the government does not need to notify those

people or get their consent. Identification can be

done in secret.

This is not business as usual: This is a capability

that is “significantly different from that which the

government has held at any point in U.S. history.”

15

C. HOW DOES LAW

ENFORCEMENT USE FACE

RECOGNITION?

The first successful fully automated face

recognition algorithm was developed in the

early 1990s.

16

Today, law enforcement agencies

mainly use face recognition for two purposes.

Face verification seeks to confirm someone’s

claimed identity. Face identification seeks to

identify an unknown face. This report focuses on

face identification by state and local police and

the FBI.

Law enforcement performs face identification for a

variety of tasks. Here are four of the most common:

• Stop and Identify. On patrol, a police officer

encounters someone who either refuses or is

unable to identify herself. The officer takes

her photo with a smartphone or a tablet,

processes that photo through software

installed on that device or on a squad car

computer, and receives a near-instantaneous

response from a face recognition system.

That system may compare that “probe” photo

to a database of mug shots, driver’s license

photos, or face images from unsolved crimes,

also known as an “unsolved photo file.” (As

part of this process, the probe photo may

also be enrolled in a database.) This process

is known as field identification.

• Arrest and Identify. A person is arrested,

fingerprinted and photographed for a

mug shot. Police enroll that mug shot in

their own face recognition database. Upon

enrollment, the mug shot may be searched

against the existing entries, which may

include mug shots, license photos, and an

unsolved photo file. Police may also submit

the arrest record, including mug shot and

fingerprints, to the FBI for inclusion in its

face recognition database, where a similar

search is run upon enrollment.

• Investigate and Identify. While investigating

a crime, the police obtain a photo or video

still of a suspect from a security camera,

smartphone, or social media post—or they

surreptitiously photograph the suspect. They

use face recognition to search that image

against a database of mug shots, driver’s

licenses, or an unsolved photo file and obtain

a list of candidates for further investigation,

or, in the case of the unsolved photo file, learn

if the individual is wanted for another crime.

Alternately, when police believe that a suspect

is using a pseudonym, they search a mug shot

of that suspect against these same databases.

Figure 2: A California policeman displays a mobile face recognition app. (Photo: Sandy Huffaker/The New York Times/Redux)

• Real-time Video Surveillance. The police

are looking for an individual or a small

number of individuals. They upload images

of those individuals to a “hot list.” A face

recognition program extracts faces from

live video feeds of one or more security

cameras and continuously compares them, in

real-time, to the faces of the people on the

hot list. Every person that walks by those

security cameras is subjected to this process.

When it finds a match, the system may send

an alert to a nearby police officer. Today,

real-time face recognition is computationally

expensive and is not instantaneous.

17

Searches can also be run on archival video.
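
The real-time "hot list" workflow in the last item can be sketched in the same way. This is a hypothetical illustration rather than a description of any deployed system: it assumes faces have already been extracted from each video frame and turned into feature vectors, it reuses cosine similarity as an assumed scoring method, and the names `scan_feed`, `hot_list`, and the `0.95` threshold are invented for the example.

```python
import math


def similarity(a, b):
    """Cosine similarity between two feature vectors (assumed scoring method)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def scan_feed(frames, hot_list, threshold=0.95):
    """Yield (frame_index, name, score) for each face that resembles a hot-list entry.

    frames is a sequence of frames; each frame is a list of face vectors.
    Every face in every frame is compared against every hot-list entry.
    """
    for index, faces in enumerate(frames):
        for face in faces:
            for name, listed in hot_list.items():
                score = similarity(face, listed)
                if score >= threshold:
                    yield index, name, score


# Toy data: one hot-list entry and two frames of passers-by (made-up numbers).
hot_list = {"person_of_interest": [0.2, 0.9, 0.1]}
frames = [
    [[0.9, 0.1, 0.3]],                      # one passer-by, not similar
    [[0.21, 0.88, 0.12], [0.5, 0.5, 0.5]],  # two passers-by, the first is similar
]

alerts = list(scan_feed(frames, hot_list))
```

The inner loops make the report's point concrete: the comparison runs on every face that appears in the feed, not only on the faces of the people being sought.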

Face recognition is also used for driver’s license

de-duplication. In this process, a department of

motor vehicles compares the face of every new

applicant for a license or other identification

document to the existing faces in its database,

flagging individuals who may be using a

pseudonym to obtain fraudulent identification.

Suspects are referred to law enforcement.

However, because de-duplication is typically

conducted by DMVs, not law enforcement, this use

of the technology will not be a focus of this report.

D. OUR RESEARCH

Thanks to the May 2016 Government

Accountability Office report, the public now

has access to basic information about the FBI’s

face recognition programs and their privacy and

accuracy issues. (Sidebar 1.)

By comparison, the public knows very little

about the use of face recognition by state and

local police, even though many of their systems

are older, used more aggressively, and more likely

to have a greater impact on the average citizen.

No one has combined what we know about

FBI systems with information about state and

local face recognition to paint a comprehensive,

national picture of how face recognition is

changing policing in America, and the impact of

these changes on our rights and freedoms.

This report closes these gaps. It begins with

a threshold question: What uses of face

recognition present greater or fewer risks

to privacy, civil liberties, and civil rights?

After proposing a Risk Framework for law

enforcement face recognition, the report explores

the following questions, each of which is

answered in our Findings:

• Deployment. Who is using face recognition,

how often are they using it, and where

do those deployments fall on the Risk

Framework?

• Fourth Amendment. How do agencies using

face recognition protect our right to be free

from unreasonable searches and seizures?

• Free Speech. How do they ensure that face

recognition does not chill our right to free

speech, assembly, and association?

• Accuracy. How do they ensure that their

systems are accurate?

• Racial Bias. How does law enforcement

face recognition impact racial and ethnic

minorities?

• Transparency and Accountability. Are

agencies using face recognition in a way that

is transparent, accountable to the public, and

subject to internal oversight?


The FBI hosts one of the largest face recognition

databases in the country, the Next Generation

Identification Interstate Photo System

(NGI-IPS). It is also home to a unit, Facial Analysis,

Comparison, and Evaluation (FACE) Services,

that supports other FBI agents by running

or requesting face recognition searches of the

FBI face recognition database, other federal

databases, and state driver’s license photo and

mug shot databases. (This report will refer to

NGI-IPS as “the FBI face recognition database

(NGI-IPS),” and will refer to FBI FACE

Services as “the FBI face recognition unit

(FACE Services).” The network of databases that

the unit searches will be called “the FBI FACE

Services network”).

The FBI face recognition database (NGI-IPS) is

mostly made up of the mug shots accompanying

criminal fingerprints submitted to the FBI

by state, local, and federal law enforcement

agencies. It contains nearly 25 million state and

federal criminal photos.

19

Police in seven states

can run face recognition searches against the

FBI face recognition database, as can the FBI

face recognition unit.

20

The FBI face recognition unit (FACE Services)

runs face recognition searches against a network

of databases that includes 411.9 million photos.

Over 185 million of these photos are drawn from

12 states that let the FBI search their driver’s

license and other ID photos; another 50 million

are from four additional states that let the FBI

search both driver’s license photos and mug

shots.

21

While we do not know the total number

of individuals that those photos implicate, there

are close to 64 million licensed drivers in those

16 states.

22

SIDEBAR 1:

FACE RECOGNITION AT THE FBI

The FBI has used face recognition to support FBI and state

and local police investigations since at least 2011.

18

The FBI is expanding the reach of the FACE

Services network, but the details are murky. In

October 2015, the FBI began a pilot program

to search photos against the State Department’s

passport database, but it is unclear if the FBI

is searching the photos of all 125 million U.S.

passport holders, or if it is searching a subset of

that database.

23

In a May 2016 report, the Government

Accountability Office reported that the FBI

was negotiating with 18 additional states and

the District of Columbia to access their driver’s

license photos. In August, the GAO re-released

the report, deleting all references to the 18 states

and stating that there were “no negotiations

underway.”

24

The FBI now suggests that FBI

agents had only conducted outreach to those

states to explore the possibility of their joining the

FACE Services network.

25

The GAO report found that the FBI had failed

to issue mandatory privacy notices required by

federal law, failed to conduct adequate accuracy

tests of the FBI face recognition database

(NGI-IPS) and the state databases that the FBI face

recognition unit accessed, and failed to audit the

state searches of the FBI face recognition database

or any of the face recognition unit’s searches.

26

Despite these findings, the FBI is proposing to

exempt the FBI face recognition database from key

Privacy Act provisions that guarantee Americans

the right to review and correct non-investigatory

information held by law enforcement—and the right

to sue if their privacy rights are violated.

27


To answer all of these questions, we submitted

detailed public records requests to over 100

law enforcement agencies across the country.

In total, our requests yielded more than 15,000

pages of responsive documents. Ninety agencies

provided responsive documents—or substantive

responses—of some kind. These responses

suggested that at least 52 state and local law

enforcement agencies that we surveyed were now

using, or have previously used or obtained, face

recognition technology. (We will refer to these

agencies as “52 agencies.”) Of the 52 agencies,

eight formerly used or acquired face recognition

but have since discontinued those programs.

Conversely, several other responsive agencies

have opened their systems to hundreds of other

agencies.

28

To support our public records research, we

conducted dozens of interviews with law

enforcement agencies, face recognition

companies, and face recognition researchers and

conducted a fifty-state legal survey of biometrics

and related surveillance laws, and an in-depth

review of the technical literature on face

recognition. We confirmed our findings through

two site visits to law enforcement agencies with

advanced face recognition systems. (Our full

research methodology, including a breakdown

of our records requests and a template for those

requests can be found in the Methodology

section.)

After assessing these risks, this report proposes

concrete recommendations for Congress,

state legislatures, federal, state and local law

enforcement agencies, the National Institute

of Standards and Technology, face recognition

companies, and community leaders.


In this section, we categorize police uses of face

recognition according to the risks that they

create for privacy, civil liberties, and civil rights.

Some uses of the technology create new and

sensitive risks that may undermine longstanding,

legally recognized rights. Other uses are far less

controversial and are directly comparable to

longstanding police practices. Any regulatory

scheme should account for those differences.

A. RISK FACTORS

The overall risk level of a particular deployment

of face recognition will depend on a variety of

factors. As this report will explain, it is unclear

how the Supreme Court would interpret the

Fourth Amendment or First Amendment to

apply to law enforcement face recognition;

there are no decisions that directly answer these

questions.

29

In the absence of clear guidance,

we can look at general Fourth Amendment and

First Amendment principles, social norms, and

police practices to identify five risk factors for

face recognition. When applied, these factors

will suggest different regulatory approaches for

different uses of the technology.

• Targeted vs. Dragnet Search. Are searches

run on a discrete, targeted basis for

individuals suspected of a crime? Or are they

continuous, generalized searches on groups

of people—or anyone who walks in front of a

surveillance camera?

At its core, the Fourth Amendment was

intended to prevent generalized, suspicionless

searches. The Fourth Amendment was inspired

by the British Crown’s use of general warrants,

also known as Writs of Assistance, that entitled

officials to search the homes of any colonial

resident.

30

This is why the Amendment requires

that warrants be issued only upon a showing of

probable cause and a particularized description

of who or what will be searched or seized.

31

For its part, the First Amendment does not

protect only our right to free speech. It also

protects our right to peaceably assemble,

to petition the government for a redress

of grievances, and to express ourselves

anonymously.

32

These rights are not total

and the cases interpreting them are at times

contradictory.

33

But police use of face

recognition to continuously identify anyone on

the street—without individualized suspicion—

could chill our basic freedoms of expression and

association, particularly when face recognition is

used at political protests.

• Targeted vs. Dragnet Database. Are

searches run against a mug shot database,

or an even smaller watchlist composed of

a handful of individuals? Or are searches run

against driver’s license photo databases that

include millions of law-abiding Americans?

Government searches against dragnet databases

have been among the most controversial national

security and law enforcement policies of the 21st

century. Public protest after Edward Snowden’s

leaks of classified documents centered on the

NSA’s collection of most domestic call records in

IV. A RISK FRAMEWORK FOR

LAW ENFORCEMENT FACE RECOGNITION


the U.S. Notably, searches of the data collected

were more or less targeted; the call records

database was not.

34

In 2015, Congress voted to

end the program.

35

• Transparent vs. Invisible Searches. Is

the face recognition search conducted in a

manner that is visible to a target? Or is that

search intentionally or inadvertently invisible

to its target?

While they may stem from legitimate law

enforcement necessity, secret searches merit

greater scrutiny than public, transparent searches.

“It should be obvious that those government

searches that proceed in secret have a greater

need for judicial intervention and approval than

those that do not,” writes Professor Susan Freiwald.

As she explains, “[i]nvestigative methods

that operate out in the open may be challenged

at the time of the search by those who observe

it.” You can’t challenge a search that you don’t

know about.

36

• Real-time vs. After-the-Fact Searches.

Does a face recognition search aim to

identify or locate someone right now? Or is

it run to investigate a person’s past behavior?

Courts have generally applied greater

scrutiny—and required either a higher level

of individualized suspicion, greater oversight,

or both—to searches that track real-time,

contemporaneous behavior, as opposed to past

conduct. For example, while there is a split in

the federal courts as to whether police need

probable cause to obtain historical geolocation

records, most courts have required warrants for

real-time police tracking.

37

Similarly, while

federal law enforcement officers obtain warrants

prior to requesting real-time GPS tracking of a

suspect’s phone by a wireless carrier, and prior

to using a “Stingray” (also known as a cell-site

simulator) which effectively conducts a real-time

geolocation search, courts have generally not

extended that standard to requests for historical

geolocation records.

38

• Established vs. Novel Use. Is a face

recognition search generally analogous

to longstanding fingerprinting practices

or modern DNA analysis? Or is it

unprecedented?

In 1892, Sir Francis Galton published

Finger Prints, a seminal treatise arguing that

fingerprints were “an incomparably surer

criterion of identity than any other bodily

feature.” Since then, law enforcement agencies

have adopted fingerprint technology for

everyday use. A comparable shift occurred in the

late 20th century around forensic DNA analysis.

Regulation of face recognition should take

note of the existing ways in which biometric

technologies are used by American law

enforcement.

At the same time, we should not put precedent

on a pedestal. As Justice Scalia noted in his

dissent in Maryland v. King, a case that explored

the use of biometrics in modern policing,

“[t]he great expansion in fingerprinting came

before the modern era of Fourth Amendment

jurisprudence, and so [the Supreme Court was]

never asked to decide the legitimacy of the

practice.”

40

A specific use of biometrics may be

old, but that doesn’t mean it’s legal.

B. RISK FRAMEWORK

Applying these criteria to the most common

police uses of face recognition, three categories

of deployments begin to emerge:

• Moderate Risk Deployments are more

targeted than other uses and generally

resemble existing police use of biometrics.


• High Risk Deployments involve the

unprecedented use of dragnet biometric

databases of law-abiding Americans.

• Very High Risk Deployments apply

continuous face recognition searches to

video feeds from surveillance footage and

police-worn body cameras, creating profound

problems for privacy and civil liberties.

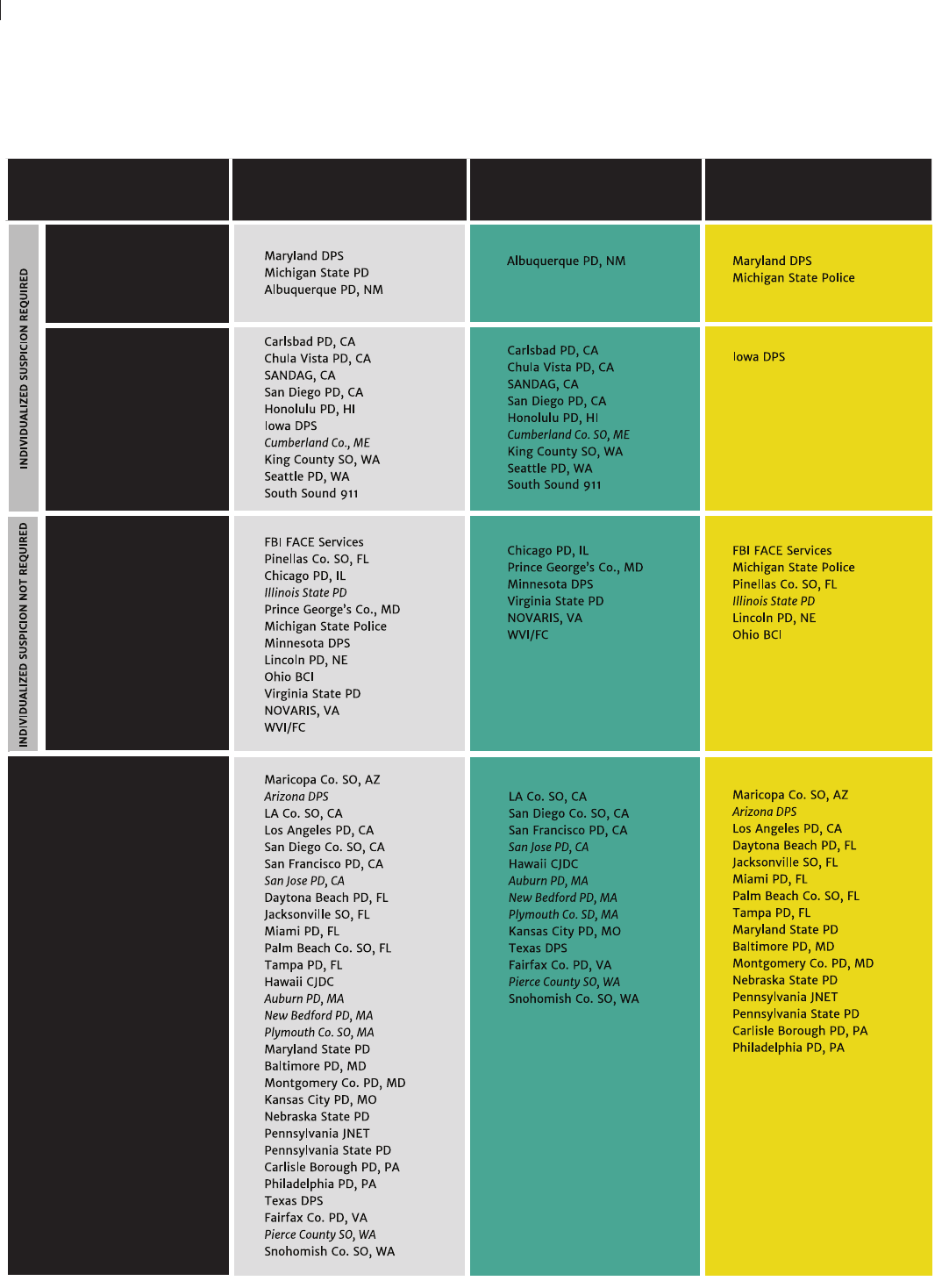

These categories are summarized in Figure 3 and

explained below. Note that not every criterion

maps neatly onto a particular deployment.
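
The three categories listed above can be read as a simple decision rule over two of the five risk factors. The sketch below is a simplification for illustration only: the `Deployment` class, its field names, and the `risk_level` function are hypothetical, and the remaining three factors (visibility, timing, and novelty) are deliberately left out.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Deployment:
    name: str
    dragnet_search: bool     # continuous search of everyone on camera?
    dragnet_database: bool   # database of driver's license or ID photos?

def risk_level(d: Deployment) -> str:
    """Simplified reading of the three categories in this section."""
    if d.dragnet_search:
        return "Very High Risk"
    if d.dragnet_database:
        return "High Risk"
    return "Moderate Risk"

deployments = [
    Deployment("Investigate and Identify (mug shot database)", False, False),
    Deployment("Stop and Identify (license database)", False, True),
    Deployment("Real-time video surveillance", True, False),
]
levels = {d.name: risk_level(d) for d in deployments}
```

Checking the search type first reflects the framework's ordering: a dragnet search is treated as the highest risk even when the watchlist it runs against is small.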

Figure 3: Risk Framework for Law Enforcement Face Recognition.

| RISK LEVEL | DEPLOYMENT | LESS RISK | MORE RISK |
|---|---|---|---|
| Moderate Risk | Stop and Identify (Mug shot Database) | Targeted Search; Targeted Database; Transparent | Real-Time; Novel Use |
| Moderate Risk | Arrest and Identify (Mug shot Database) | Targeted Search; Targeted Database; Established Use | Invisible |
| Moderate Risk | Investigate and Identify (Mug shot Database) | Targeted Search; Targeted Database; After-the-Fact; Established Use | Invisible |
| High Risk | Stop and Identify (License Database) | Targeted Search; Transparent | Dragnet Database; Real-Time; Novel Use |
| High Risk | Arrest and Identify (License Database) | Targeted Search | Dragnet Database; Invisible; Novel Use |
| High Risk | Investigate and Identify (License Database) | Targeted Search; After-the-Fact | Dragnet Database; Invisible; Novel Use |
| Very High Risk | Real-Time Video Surveillance | Targeted Database | Dragnet Search; Invisible; Real-Time; Novel Use |
| Very High Risk | Historical Video Surveillance | Targeted Database; After-the-Fact | Dragnet Search; Invisible; Novel Use |


1. MODERATE RISK DEPLOYMENTS.

The primary characteristic of moderate risk

deployments is the combination of a targeted

search with a relatively targeted database.

When a police officer uses face recognition to

identify someone during a lawful stop (Stop and

Identify), when someone is enrolled and searched

against a face recognition database after an arrest

(Arrest and Identify), or when police departments

or the FBI use face recognition systems to

identify a specific criminal suspect captured by

a surveillance camera (Investigate and Identify),

they are conducting a targeted search pursuant

to a particularized suspicion—and adhering to a

basic Fourth Amendment standard.


Mug shot databases are not entirely “targeted.”

They’re not limited to individuals charged with

felonies or other serious crimes, and many of

them include people like Chris Wilson—people

who had charges dismissed or dropped, who

were never charged in the first place, or who were

found innocent of those charges. In the FBI face

recognition database (NGI-IPS), for example,

over half of all arrest records fail to indicate

a final disposition.

41

The failure of mug shot

databases to separate the innocent from the guilty—

and their inclusion of people arrested for peaceful,

civil disobedience—are serious problems that must

be addressed. That said, systems that search against

mug shots are unquestionably more targeted than

systems that search against a state driver’s license

and ID photo database.

Two of the three moderate risk deployments—

while invisible to the search subject—mirror

longstanding police practices. The enrollment

and search of mug shots in face recognition

databases (Arrest and Identify) parallels the

decades-old practice of fingerprinting arrestees

during booking. Similarly, using face recognition

to compare the face of a bank robber—captured

by a security camera—to a database of mug shots

(Investigate and Identify) is clearly comparable

to the analysis of latent fingerprints at crime

scenes.

The other lower risk deployment, Stop and

Identify, is effectively novel.

42

It is also

necessarily conducted in close to real-time. On

the other hand, a Stop and Identify search is the

only use of face recognition that is somewhat

transparent. When an officer stops you and asks

to take your picture, you may not know that he’s

about to use face recognition—but it certainly

raises questions.

43

The vast majority of face

recognition searches are effectively invisible.

44

2. HIGH RISK DEPLOYMENTS.

High risk deployments are quite similar to

moderate risk deployments—except for the

databases that they employ. When police or

the FBI run face recognition searches against

the photos of every driver in a state, they create

a virtual line-up of millions of law-abiding

Americans—and cross a line that American law

enforcement has generally avoided.

Law enforcement officials emphasize that they

are merely searching driver’s license photos that

people have voluntarily chosen to provide to state

government. “Driving is a privilege,” said Sheriff

Gualtieri of the Pinellas County Sheriff’s Office.

45


People in rural states—and states with voter

ID laws—may chafe at the idea that getting a

driver’s license is a choice, not a necessity. Most

people would also be surprised to learn that

by getting a driver’s license, they “volunteer”

their photos to a face recognition network

searched thousands of times a year for criminal

investigations.

That surprise matters: A founding principle

of American privacy law is that government

data systems should notify people about how

their personal information will be used, and

that personal data should not be used outside

of the “stated purposes of the [government

data system] as reasonably understood by the

individual, unless the informed consent of the

individual has been explicitly obtained.”

46

Most critically, however, by using driver’s

license and ID photo repositories as large-

scale, biometric law enforcement databases,

law enforcement enters controversial, if not

uncharted, territory.


Historically, law enforcement biometric

databases have been populated exclusively or

primarily by criminal or forensic samples. By

federal law, the FBI’s national DNA database,

also known as the National DNA Index System,

or “NDIS,” is almost exclusively composed of

DNA profiles related to criminal arrests or

forensic investigations.

47

Over time, the FBI’s

fingerprint database has come to include

non-criminal records, including the fingerprints

of immigrants and civil servants. However,

as Figure 4 shows, even when one considers

the addition of non-criminal fingerprint

submissions, the latest figures available suggest

that the fingerprints held by the FBI are still

primarily drawn from arrestees.

The FBI face recognition unit (FACE Services)

shatters this trend. By searching 16 states’

driver’s license databases, American passport

photos, and photos from visa applications,

the FBI has created a network of databases

that is overwhelmingly made up of non-

criminal entries. Never before has federal law

enforcement created a biometric database—or

network of databases—that is primarily made up

of law-abiding Americans. Police departments

should carefully weigh whether they, too, should

cross this threshold.

48


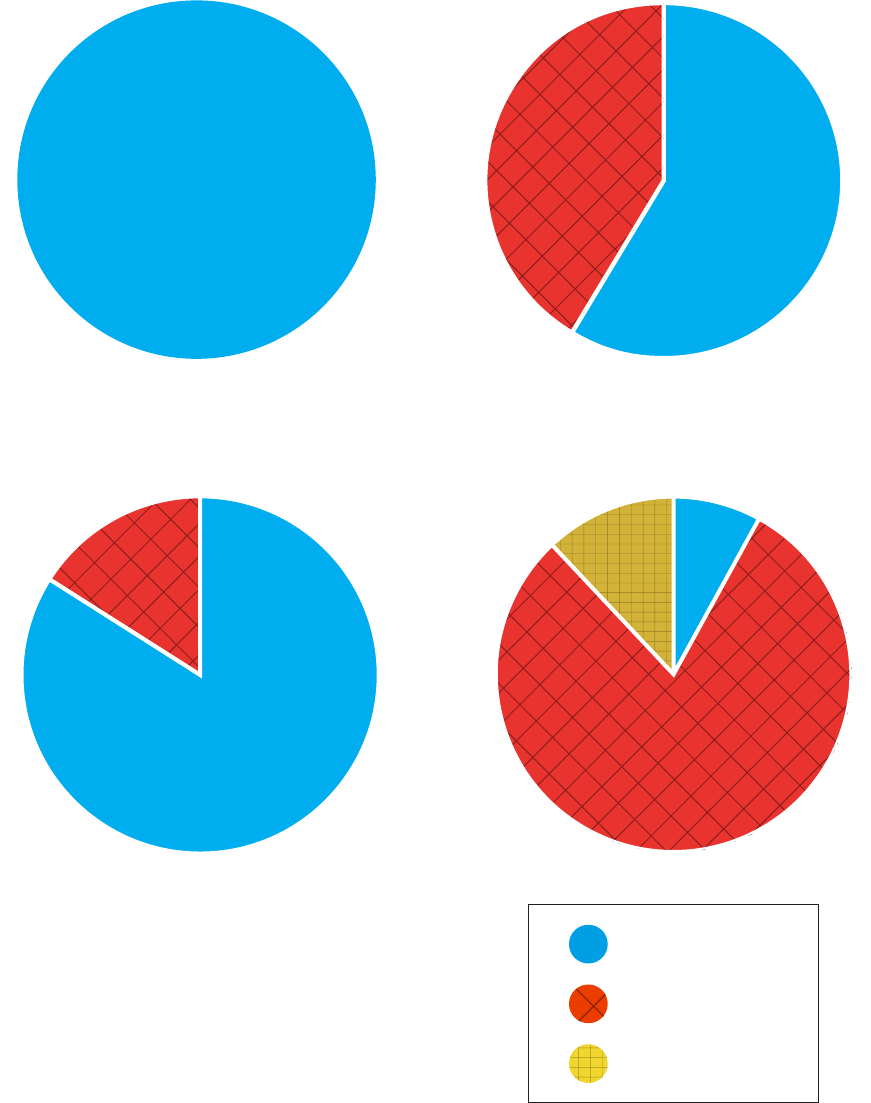

Figure 4: Criminal vs. Non-Criminal Makeup of FBI Biometric Databases & Networks. Panels: FBI DNA Database (NDIS) (2016); FBI Fingerprint Database (formerly IAFIS) (2016); FBI Face Recognition Database (NGI-IPS) (2016); FBI Face Recognition Network (FACE Services) (2016). Legend: Criminal or Forensic; Non-Criminal; Unknown. Chart values: 100%; 41% and 59%; 84% and 16%; 80%, 12%, and 8%.

Sources: FBI, GAO.

49


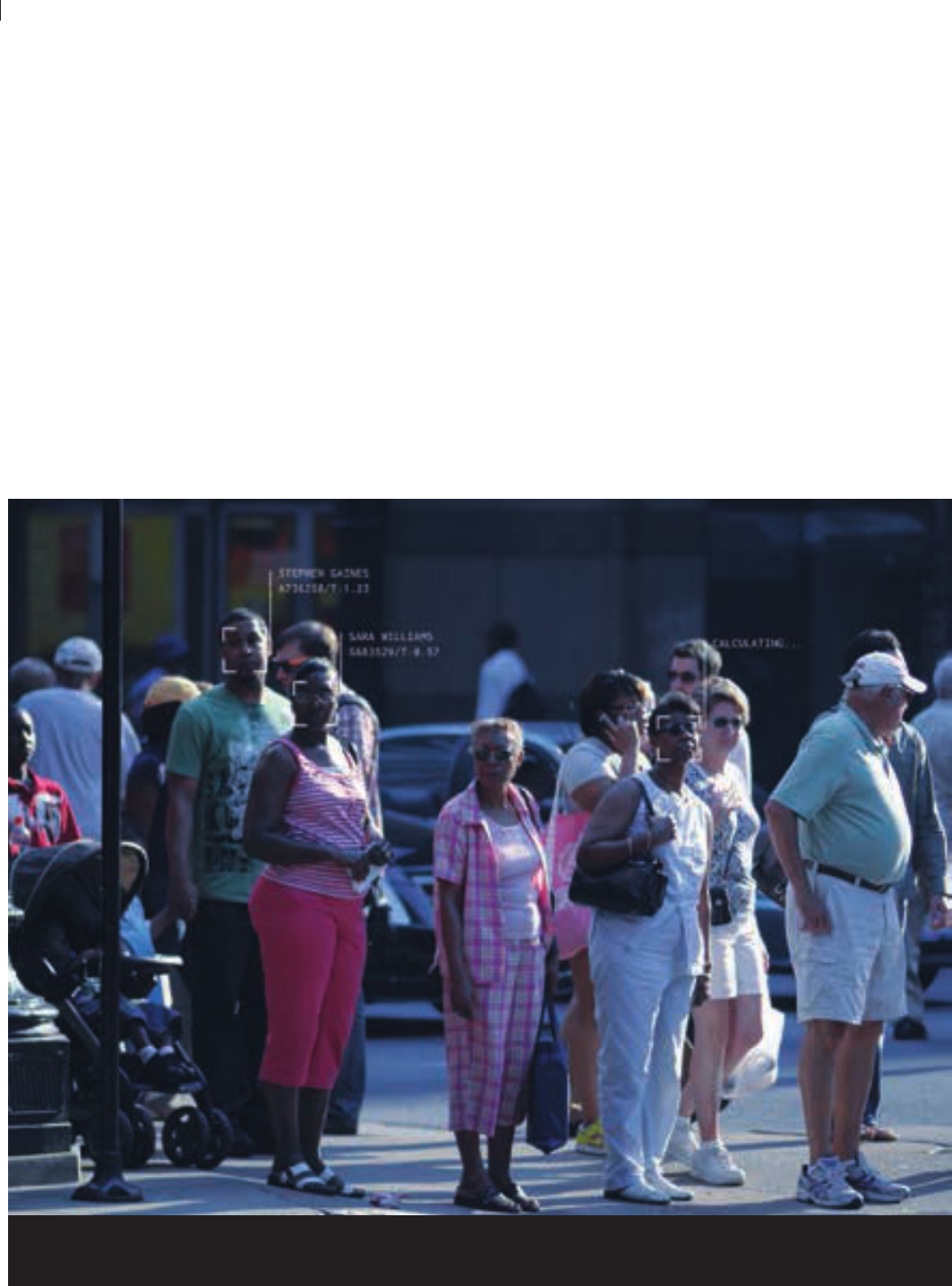

3. VERY HIGH RISK DEPLOYMENTS.

Real-time face recognition marks a radical

change in American policing—and American

conceptions of freedom. With the unfortunate

exception of inner-city black communities—

where suspicionless police stops are all too

common

50

—most Americans have always

been able to walk down the street knowing that

police officers will not stop them and demand

identification. Real-time, continuous video

surveillance changes that. And it does so by

making those identifications secret, remote, and

potentially pervasive. What’s more, as this report

explains, real-time identifications may also be

significantly less accurate than identifications in

more controlled settings (i.e. Stop and Identify,

Arrest and Identify).

51

In a city equipped with real-time face

recognition, every person who walks by a street

surveillance camera—or a police-worn body

camera—may have her face searched against

a watchlist. Right now, technology likely

limits those watchlists to a small number of

individuals. Future technology will not have

such limits, allowing real-time searches to be run

against larger databases of mug shots or even

driver’s license photos.

52

There is no current analog—in technology or

in biometrics—for the kind of surveillance

that pervasive, video-based face recognition

can provide. Most police geolocation tracking

technology tracks a single device, requesting

the records for a particular cell phone from a

wireless company, or installing a GPS tracking

device on a particular car. Exceptions to that

trend—like the use of cell-tower “data dumps”

and cell-site simulators—generally require either

a particularized request to a wireless carrier or

the purchase of a special, purpose-built device

(i.e., a Stingray). A major city like Chicago may

own a handful of Stingrays. It reportedly has

access to 10,000 surveillance cameras.

53

If cities

like Chicago equip their full camera networks

with face recognition, they will be able to track

someone’s movements retroactively or in real-

time, in secret, and by using technology that

is not covered by the warrant requirements of

existing state geolocation privacy laws.

54


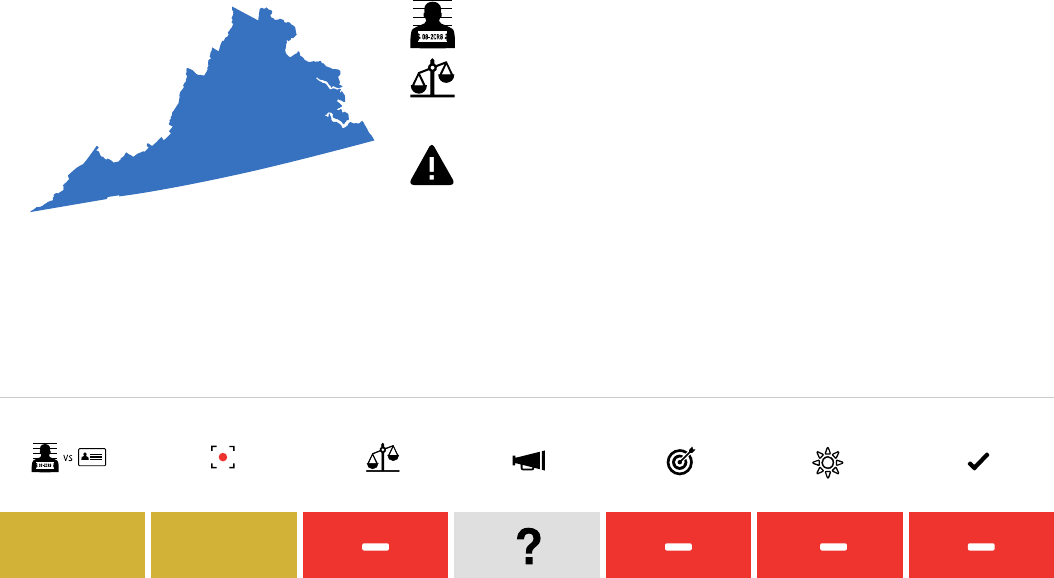

We studied the (1) Deployment of face

recognition technology—namely how many

agencies use face recognition, how often they

use it, and the risk level of those uses. We also

studied the measures that agencies and other

stakeholders apply to protect (2) our Fourth

Amendment rights and (3) our right to Free

Speech, and evaluated the steps they took to

protect against (4) Accuracy problems and the

potential for (5) Racial Bias in error rates and

in use more generally. Finally, we studied the (6)

Transparency & Accountability provisions in

place at agencies using the technology.

This section outlines our top-level findings in

each of these areas. In our Face Recognition

Scorecard, and in the City and State

Backgrounders, we evaluate how each of 25

specific agencies, plus the FBI, performs in these

same fields.

55

The criteria for the scores are

described in their corresponding subsections. Two

of the subsections, Deployment and Transparency

& Accountability, are measured with two separate

scores, whereas one subsection, Racial Bias, is not

scored at all. (Our full scorecard methodology can

be found in the report on page 93.)

Before proceeding further, a disclaimer is in

order. Our findings are based on 15,000 pages

of documents provided in response to over 100

records requests. Many of those records were

partial, redacted, or otherwise incomplete. We

have made extensive efforts to give state and

local police departments the ability to review

and correct our conclusions regarding their

face recognition systems, but it is inevitable

that errors and misunderstandings will occur.

We invite agencies to contact the authors

with corrections and clarifications—and

improvements to their systems—so that this

report may be updated accordingly.

A. DEPLOYMENT

Neil Stammer was a fugitive wanted for child

abuse and kidnapping who had evaded capture

for 14 years after failing to show up for his

arraignment. Then, in 2014, a State Department

official with the Diplomatic Security Service

ran the FBI’s wanted posters through a database

designed to detect passport fraud—and got a hit

for Kevin Hodges, an American living in Nepal.

It was Stammer, who’d been living in Nepal for

years under a pseudonym. He was arrested and

returned to the United States to face charges.

56

The year before Stammer was caught, on the

other side of the world, the Los Angeles Police

Department announced the installation of

16 new surveillance cameras in “undisclosed

locations” across the San Fernando Valley. The

cameras were mobile, wireless, and programmed

to support face recognition “at distances of up to

600 feet.”

57

LA Weekly reported that they fed into

the LAPD’s Real-time Analysis and Critical

Response Center, which would scan the faces in

the feed against “hot lists” of wanted criminals or

“documented” gang members.

58

It appears that

every person who walks by those cameras has

her face searched in this way.

V. FINDINGS & SCORECARD


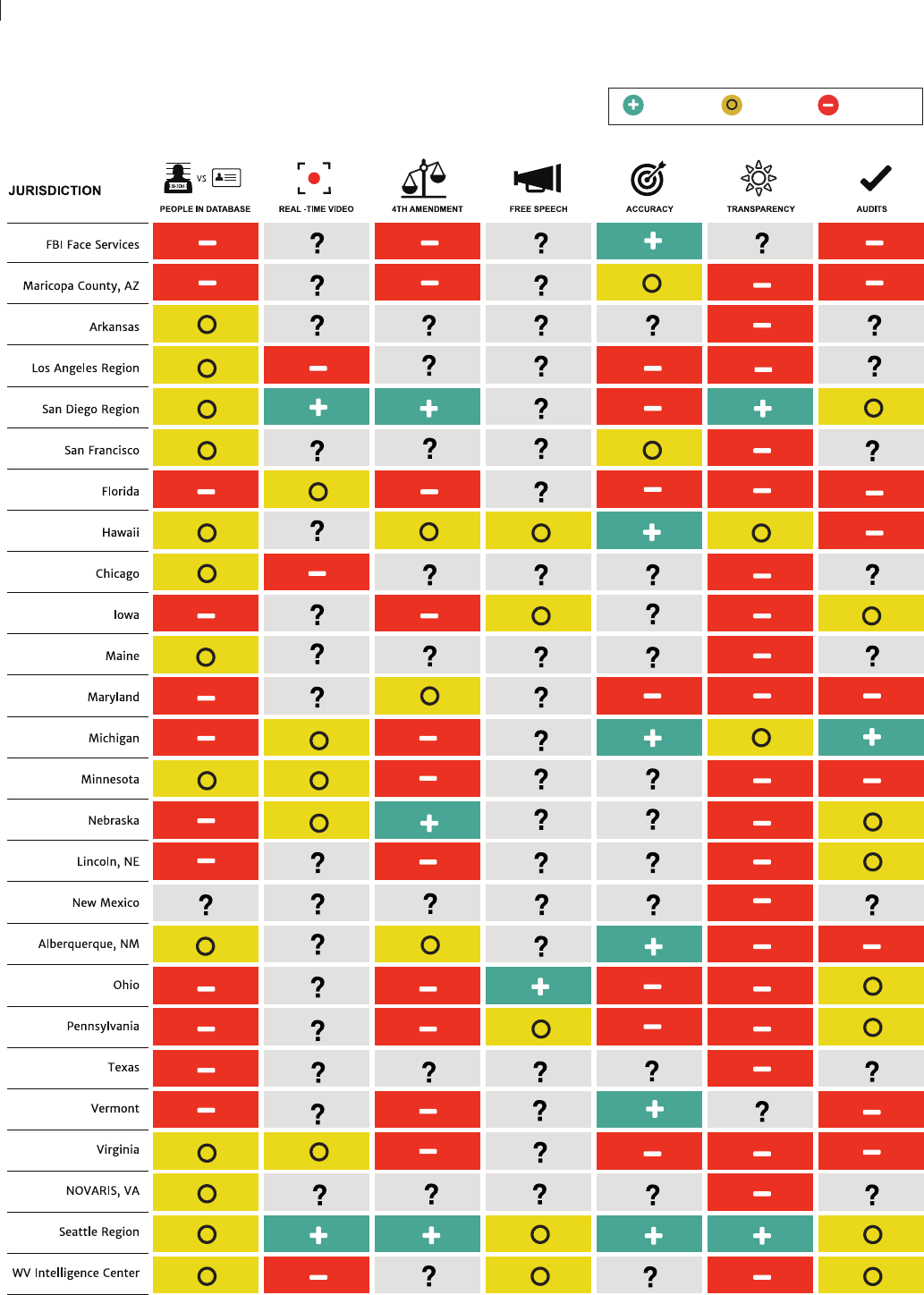

FACE RECOGNITION SCORECARD

Positive Caution Negative


What agencies are using face recognition for law

enforcement, how often are they using it, and

how risky are those deployments?

When proponents of face recognition answer

these questions, they often cite cases like Neil

Stammer’s: A felon, long-wanted for serious

crimes, is finally brought to justice through

the last-resort use of face recognition by a

sophisticated federal law enforcement agency.

59

The LAPD’s system suggests a more sobering

reality: Police and the FBI use face recognition

for routine, day-to-day law enforcement. And

state and local police, not the FBI, are leading

the way towards the most advanced—and

highest risk—deployments.

1. How many law enforcement agencies use

face recognition?

We estimate that more than one in four of

all American state and local law enforcement

agencies can run face recognition searches of

their own databases, run those searches on

another agency’s face recognition system, or have

the option to access such a system.

60

Some of the longest-running and largest systems

are found at the state and local level. The Pinellas

County Sheriff’s Office in Florida, for example,

began implementing its current system in 2001.

61

Over 5,300 officials from 242 federal, state, and

local agencies have access to the system.

62

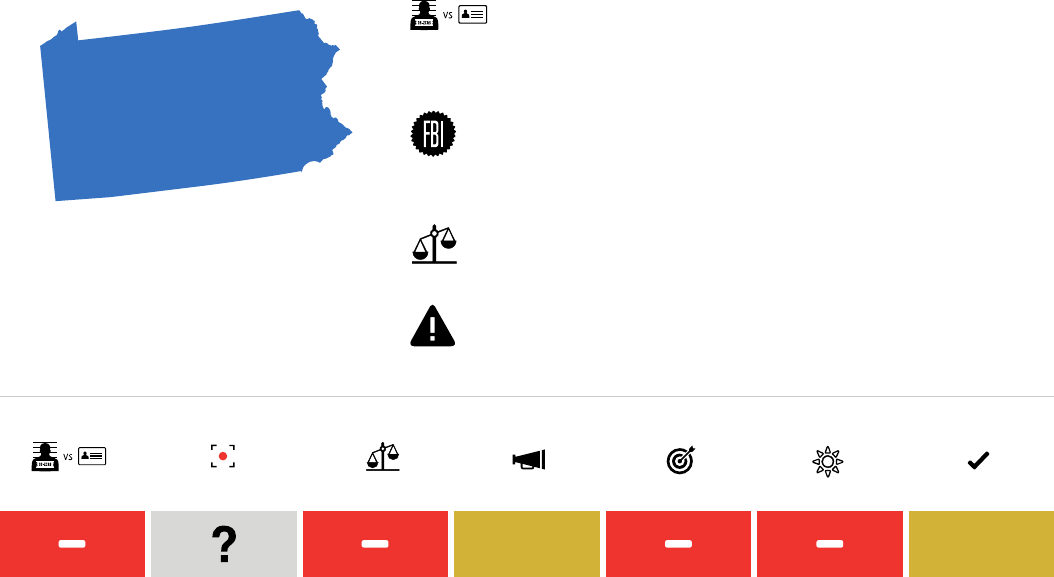

In

Pennsylvania, officials from over 500 agencies

already use the state’s face recognition system,

which is open to all 1,020 law enforcement

agencies in the state.

63

Many federal agencies access state face

recognition systems. While the GAO reports

that the FBI face recognition unit (FACE

Services) searches 16 state driver’s license

databases,

64

this is not a complete picture of the

FBI’s reach into state systems: We found that,

after undergoing training, FBI agents in Florida

field offices have direct access to the Pinellas

County Sheriff’s Office system, which can run

searches against all of Florida driver’s license

photos. Notably, the GAO does not identify

Florida as forming part of the FACE Services

network.

65

It is possible that other field offices

can access other state systems, such as those in

Pennsylvania and Maryland.

66

The Department of Defense, the Drug

Enforcement Administration, Immigration and

Customs Enforcement, the Internal Revenue

Service, the Social Security Administration, the

U.S. Air Force Office of Special Investigations,

and the U.S. Marshals Service have all had access

to one or more state or local face recognition

systems.

67

2. How often do law enforcement agencies

use face recognition?

Face recognition searches are routine at the

federal and state level. FBI face recognition

searches of state driver’s license photos are

almost six times more common than federal

court-ordered wiretaps.

68

From August 2011

to December 2015, the FBI face recognition

unit (FACE Services) ran close to 214,920

face recognition searches, including 118,490

searches of its own database and 36,420 searches

against the 16 state driver’s license and mug

shot databases. The remainder was run against

the Department of Defense database and the

Department of State’s visa and passport photo

databases.

69

In its first eight months of operation, Ohio’s

system was used 6,618 times by 504 agencies,

though its usage rate has since gone down. In

the first four months of 2016, the system was

searched 1,429 times by 104 different agencies.

70


San Diego agencies run an average of around

560 searches of the San Diego Association of

Governments’ system each month.

71

Pinellas

County’s system may be the most widely

used; its users conduct around 8,000 searches

per month. This appears to be much more

frequent than the searches run by the FBI face

recognition unit—almost twice as often, on

average.

73
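
The "almost twice as often" comparison can be checked with a short calculation from the figures quoted in this section. The sketch assumes the FBI period of August 2011 to December 2015 is counted inclusively as 53 months, and it treats the Pinellas County figure of 8,000 searches per month as exact. Both are simplifying assumptions, and the variable names are illustrative.

```python
# Figures quoted in this section.
fbi_total_searches = 214_920      # FACE Services, August 2011 to December 2015
fbi_months = 5 + 4 * 12           # Aug-Dec 2011 plus the full years 2012-2015
pinellas_per_month = 8_000        # approximate figure for Pinellas County

fbi_per_month = fbi_total_searches / fbi_months
ratio = pinellas_per_month / fbi_per_month

print(f"FBI unit: about {fbi_per_month:,.0f} searches per month")
print(f"Pinellas County: about {ratio:.1f} times the FBI unit's monthly rate")
```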

We have only a partial sense of how effective

these searches are. There are no public statistics

on the success rate of state face recognition

systems. We do know the number of FBI

searches that yielded likely candidates—

although we do not know how many actual

identifications resulted from those potential

matches. The statistics are nonetheless striking:

Of the FBI’s 36,420 searches of state license

photo and mug shot databases, only 210

(0.6%) yielded likely candidates for further

investigations. Overall, 8,590 (4%) of the FBI’s

214,920 searches yielded likely matches.

74
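
The two percentages above follow directly from the search counts. A minimal sketch of the arithmetic, where the helper name `share` is illustrative only:

```python
def share(part, whole):
    """Return `part` as a percentage of `whole`."""
    return 100 * part / whole

# Searches of state license photo and mug shot databases.
state_rate = share(210, 36_420)

# All searches run by the FBI face recognition unit.
overall_rate = share(8_590, 214_920)

print(f"State databases: {state_rate:.1f}% yielded likely candidates")
print(f"All searches: {overall_rate:.0f}% yielded likely matches")
```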

3. How risky are those deployments?

We found that a large number of police

departments are engaging in high risk

deployments, and that several of the agencies are

actively exploring real-time video surveillance.

a) Moderate Risk Deployments

Of the 52 agencies we surveyed which were

now using or had previously used or obtained

face recognition technology, we identified 29

that are deploying face recognition under a

Moderate Risk model—Stop and Identify,

Arrest and Identify, and/or Investigate and

Identify off of mug shot databases. Most of the

agencies use their systems in a variety of ways.

Only one current system, used by the San Diego

Association of Governments (SANDAG), is

designed to be used only for Stop and Identify

searches.

75

None of the agencies indicated that its mug

shot database was limited to individuals arrested

for felonies or other serious crimes. Only one

agency, the Michigan State Police, deletes mug

shots of individuals who are not charged or

found innocent.

76

The norm, rather, is reflected

in an agency like the Pinellas County Sheriff’s

Office. Its mug shot database is not scrubbed to

eliminate cases that did not result in conviction.

To be removed from the database, individuals

need to obtain an expungement order—a process

that can take months to be resolved.

77

b) High Risk Deployments

High risk deployments—whether Stop and

Identify, Arrest and Identify, or Investigate

and Identify—are typified by their access to

state driver’s license and ID photo databases.

Our requests revealed that 19 state or local law

enforcement agencies in eight states allow

face recognition searches of these databases.

Combining that with recent information from

the GAO, and earlier reporting that we verified

against the GAO report or through our own

research, we identified 26 states that enroll their

residents in a virtual line-up.

78

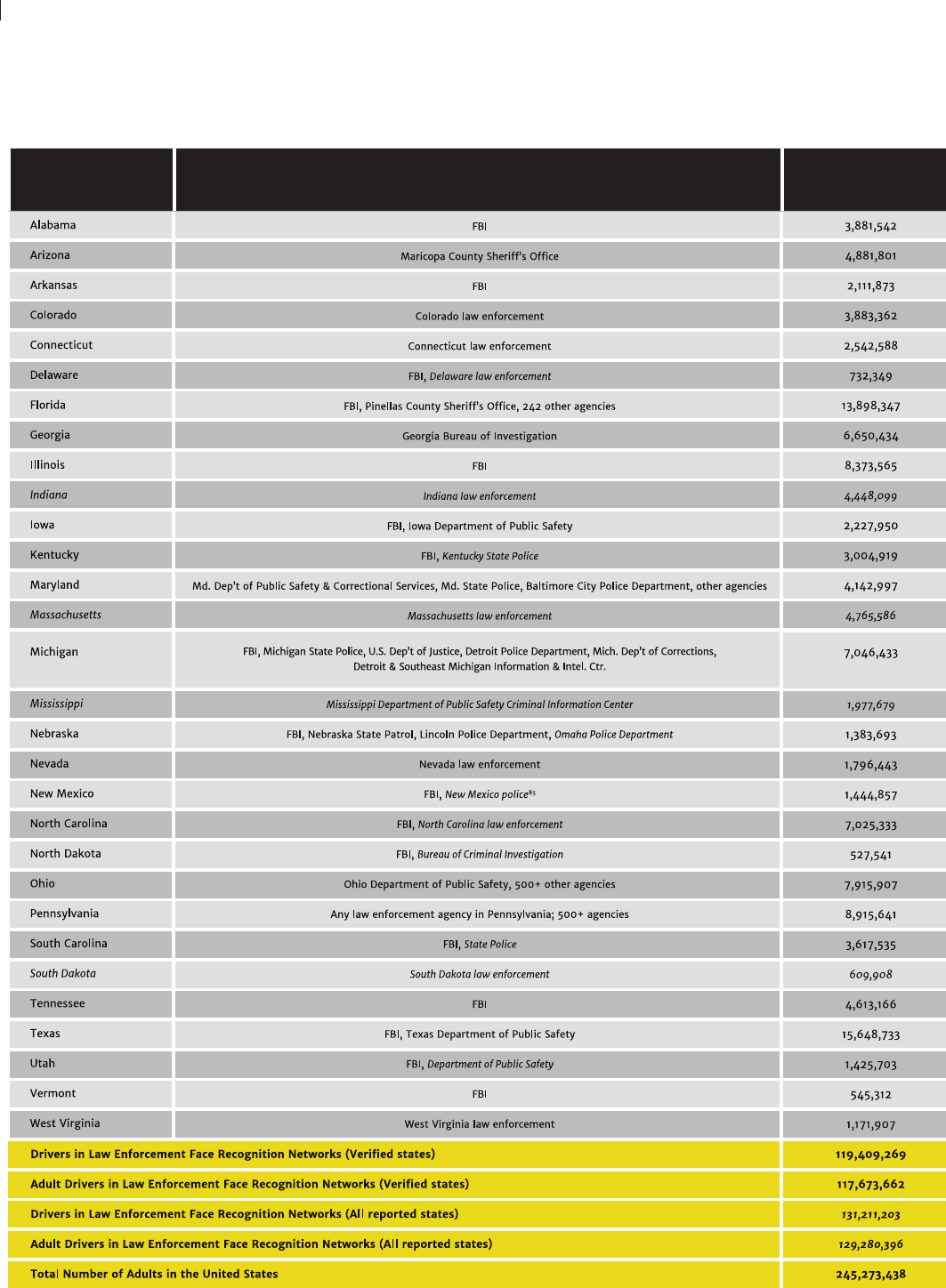

In 2014, there were 119,409,269 drivers in these

states, of whom 117,673,662 were adults aged

18 or older and 1,736,269 were minors aged 17

or younger.

79

The U.S. Census estimated that in

2014, there were 245,273,438 American adults

in the country.

80

This means that, at a minimum,

roughly 1 in 2 American adults (48%) have

had their photos enrolled in a criminal face

recognition network.


The figure is likely larger than that. In 2013, the

Washington Post and the Cincinnati Enquirer

conducted similar surveys that flagged four other

states—Indiana, Massachusetts, Mississippi

and South Dakota—that allowed access but that

we were not able to verify.

81

If all four of those

states continue to grant access, the total number

of licensed drivers in face recognition networks

would increase to 131,211,203, of whom

129,280,396 were adults.

82

That comes out to

53% of the adult population.

83
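The "1 in 2" and 53% figures are simple ratios of adult licensed drivers to the Census estimate of American adults. A minimal sketch of that calculation, using only the counts quoted above (the constant and function names are illustrative assumptions):

```python
# Counts quoted in the text above; names are illustrative assumptions.
US_ADULTS_2014 = 245_273_438           # U.S. Census estimate of American adults, 2014
ADULT_DRIVERS_VERIFIED = 117_673_662   # adult drivers in the 26 verified states
ADULT_DRIVERS_EXPANDED = 129_280_396   # adult drivers if the four unverified states are added


def share_of_adults(adult_drivers: int, adults: int = US_ADULTS_2014) -> float:
    """Return adult drivers as a percentage of all American adults."""
    return 100 * adult_drivers / adults


minimum_share = share_of_adults(ADULT_DRIVERS_VERIFIED)
expanded_share = share_of_adults(ADULT_DRIVERS_EXPANDED)

print(f"Verified states only: {minimum_share:.0f}%")     # rounds to 48%
print(f"With four more states: {expanded_share:.0f}%")   # rounds to 53%
```

Both shares count only licensed drivers, so they are lower bounds on the number of people enrolled: holders of non-driver ID cards are not included in the driver totals.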

c) Very High Risk Deployments

In May 2016, one of the world’s leading face

recognition companies reportedly entered into

an agreement with the city government of

Moscow, Russia. The company, called N-Tech.

Lab, would test its software on footage from

Moscow’s CCTV cameras. “People who pass by

the cameras are verified against the connected

database of criminals or missing persons,” the

company’s founder said. “If the system signals

a high level of likeness, a warning is sent to a

police officer near the location.” Following the

trial, the company will reportedly install its

software on Moscow’s CCTV system. The city

has over 100,000 surveillance cameras.

86

Is real-time video surveillance like that seen in

Moscow coming to major American cities? The

answer to this question is likely “yes.”

In the U.S., no police department other than

the LAPD openly claims to use real-time

face recognition. But our review of contract

documents and other reports suggests that at least

four other major police departments have bought

or expressed plans to buy real-time systems.

• In 2012, the West Virginia Intelligence

Fusion Center purchased a system with

the ability to “automatically monitor video

surveillance footage and other video for

instances of persons of interest.”

87

• In a 2012 grant application, the Chicago

Police Department requested funds for high-

end video processing servers “configured

to process video analytics and facial

recognition… to allow for real-time analysis

of simultaneous high quality video streams.”

88

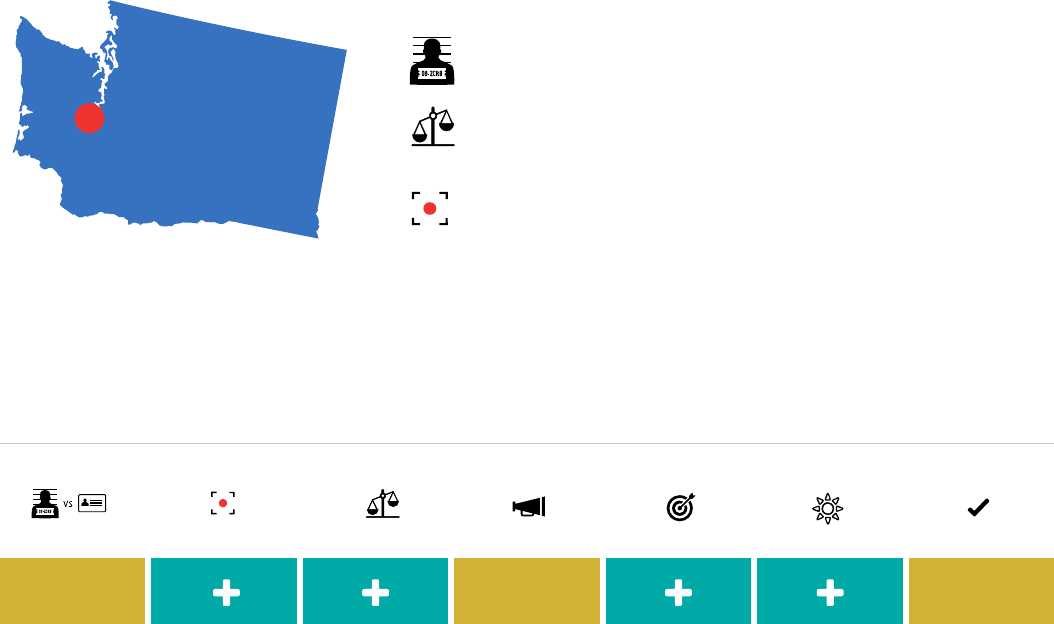

• In 2012, South Sound 911 in Washington

state wrote in its Request for Proposals for

face recognition capabilities: “The system

should have the ability to do facial recognition

searches against live-feed video.”

89

However,

the final manual for the face recognition

system, which was adopted by the Seattle

Police Department, states that it “may not be

used to connect with ‘live’ camera systems.”

90

• The Dallas Area Rapid Transit police

announced plans to deploy real-time face

recognition software throughout its system

sometime in 2016.

91

This means that five major American police

departments either claim to use real-time video

surveillance, have bought the necessary hardware

and software, or have expressed a written interest

in buying it.

The supply exists to meet this demand. Almost

all major face recognition companies advertise

real-time face recognition systems. Specifically:

• NEC, the top performer in NIST

accuracy tests,

92

advertises that “[f]ace

recognition can do far more than is generally

understood,” and offers an “application for

real-time video surveillance” that can

“[d]etect[] subjects in a crowd in real time.”

93


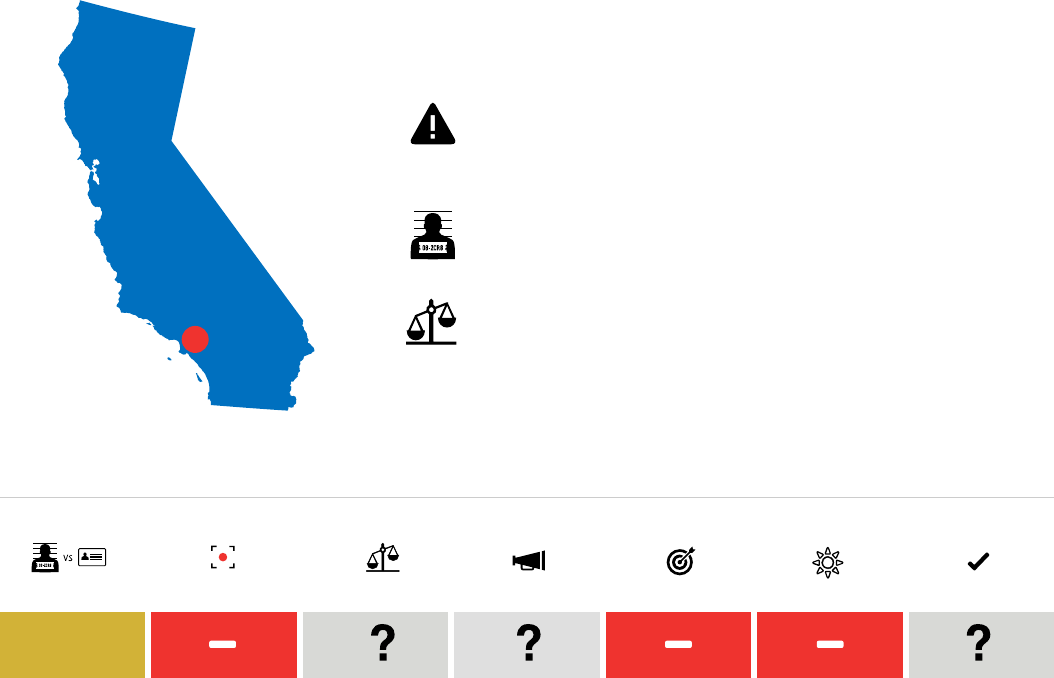

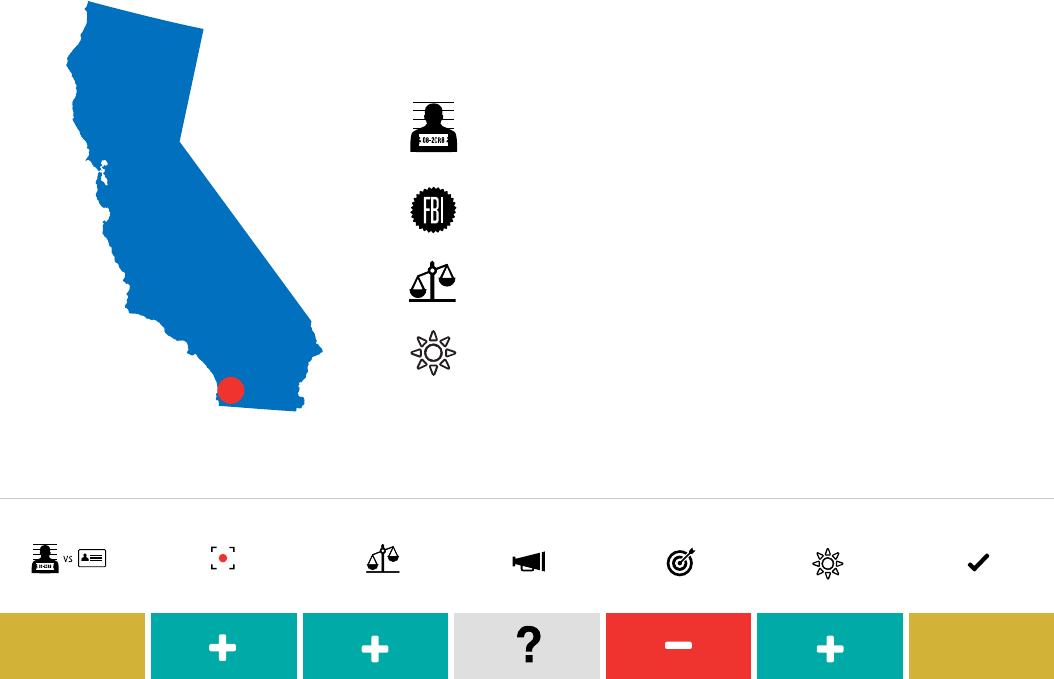

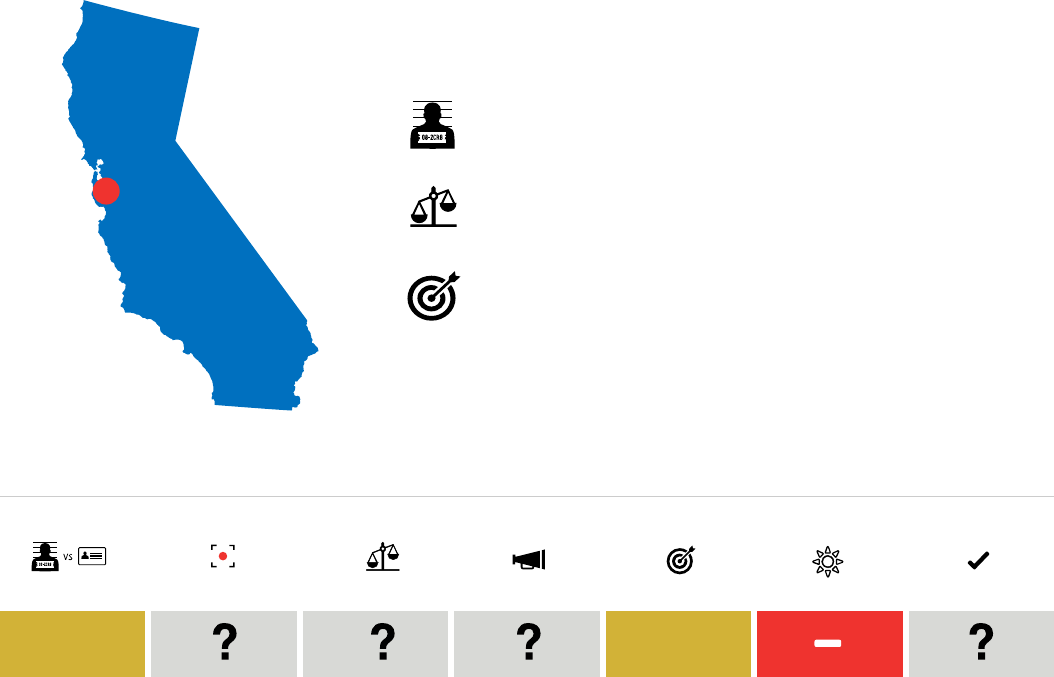

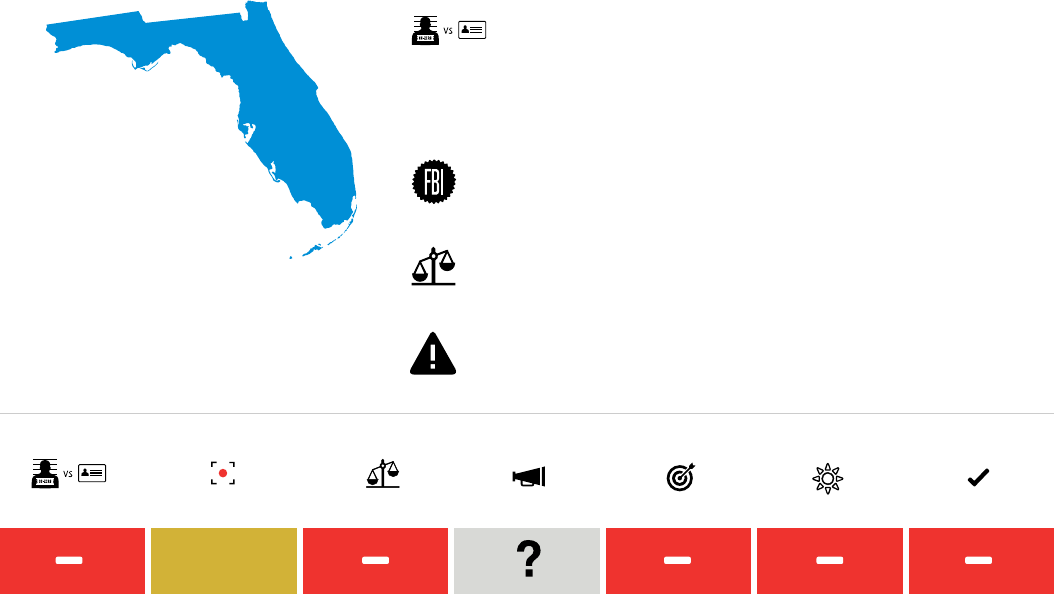

Figure 5: Drivers and Adults in Law Enforcement Face Recognition Networks (2014)

States and agencies reported to allow or run searches of license and ID photos are in italics

84

Note: This is not an exhaustive accounting of law enforcement access to driver’s license photo databases. Other states that were not identified in our research may allow this access.

Sources: GAO, FOIA documents, U.S. Dep’t of Transportation, Federal Highway Administration, Washington Post, Cincinnati Enquirer, Police Executive Research Forum

Table columns: State License Photo Database | What Law Enforcement Agencies Can Run or Request Face Recognition Searches? | Number of Drivers (2014)


• Cognitec advertises its “FaceVACS—

VideoScan” solution to “instantly detect,

track, recognize and analyze people in live

video streams or video footage.”

94

• 3M Cogent recently introduced a new “3M

Live Face Identification System” that “uses

live video to match identities in real time . . .

The system automatically recognizes multiple

faces . . . to identify individual people from

dynamic, uncontrolled environments.”

95

• Safran Identity & Security offers Morpho

Argus, a “real-time video screening system,

processing faces captured within live or pre-

recorded video streams.”

96

• Dynamic Imaging has advertised a system

add-on that would “support the ability to

perform facial recognition searches against

live-feed video.”

97

• DataWorks Plus claims to be able to

“[r]apidly detect faces in live video

surveillance monitoring for face

recognition.”

98

The proliferation of police body-worn cameras

presents another opportunity for real-time face

recognition. Rick Smith, the CEO of Taser, the

leading manufacturer of body cameras, recently

told Bloomberg Businessweek that he expects

real-time face recognition off of live streams

from body cameras to eventually become a

reality.

99